Stats Bootcamp - class 11

Distributions and summary stats

RNA Bioscience Initiative | CU Anschutz

2025-10-20

Learning objectives

Learn types of variables

Calculate and visualize summary statistics

Properties of data distributions

Central limit theorem

Quantitative Variables

Discrete variable: numeric variables that have a countable number of values between any two values - integer in R (e.g., number of mice, read counts).

Continuous variable: numeric variables that have an infinite number of values between any two values - numeric in R (e.g., normalized expression values, fluorescent intensity).

Categorical Variables

Nominal variable: (unordered) variables whose categories have no inherent order - factor in R (e.g., country, type of gene, genotype).

Ordinal variable: (ordered) variables whose categories have a meaningful order - ordered factor (ordered levels) in R (e.g., tumor grade).

Distributions and probabilities

A distribution in statistics is a function that shows the possible values for a variable and how often they occur.

We can visualize this with a histogram or density plots as we did earlier.

We are going to start with simulated data and then use Palmer Penguins later.

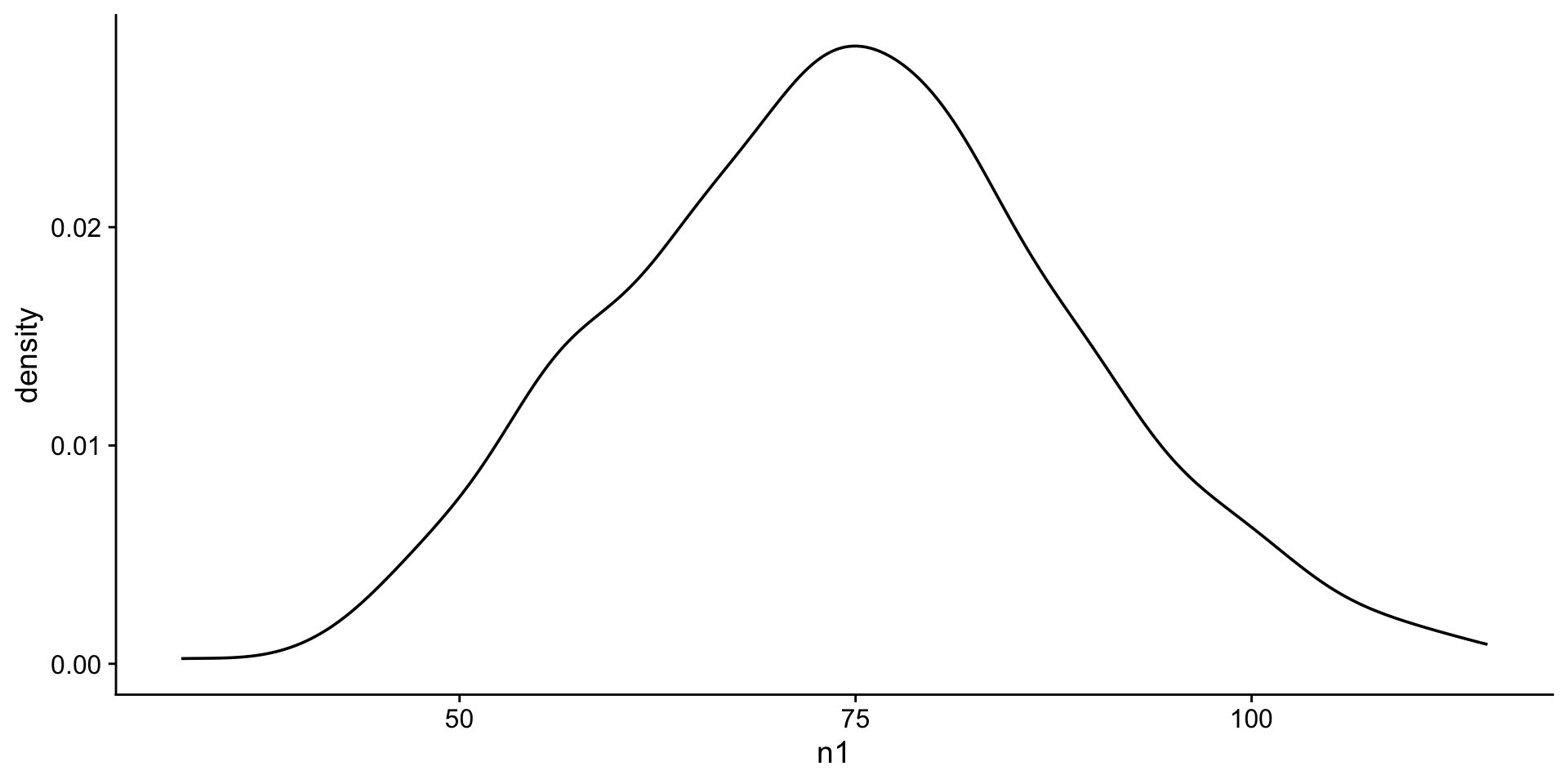

Create a normal distribution

Assume that the test scores of a college entrance exam fit a normal distribution. Furthermore, the mean test score is 76, and the standard deviation is 13.8.
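A minimal sketch of how such scores could be simulated in base R. The seed, the sample size of 1000, and the object name `scores` are assumptions for illustration; only the mean and standard deviation come from the slide.

```r
# Simulate exam scores from a normal distribution (mean 76, sd 13.8).
# The seed and n = 1000 are arbitrary choices for this sketch.
set.seed(42)
scores <- rnorm(n = 1000, mean = 76, sd = 13.8)

# The sample mean and sd should land close to the values we asked for.
mean(scores)
sd(scores)
```

Because the values are random draws, the sample mean and standard deviation will be near, but not exactly equal to, 76 and 13.8.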

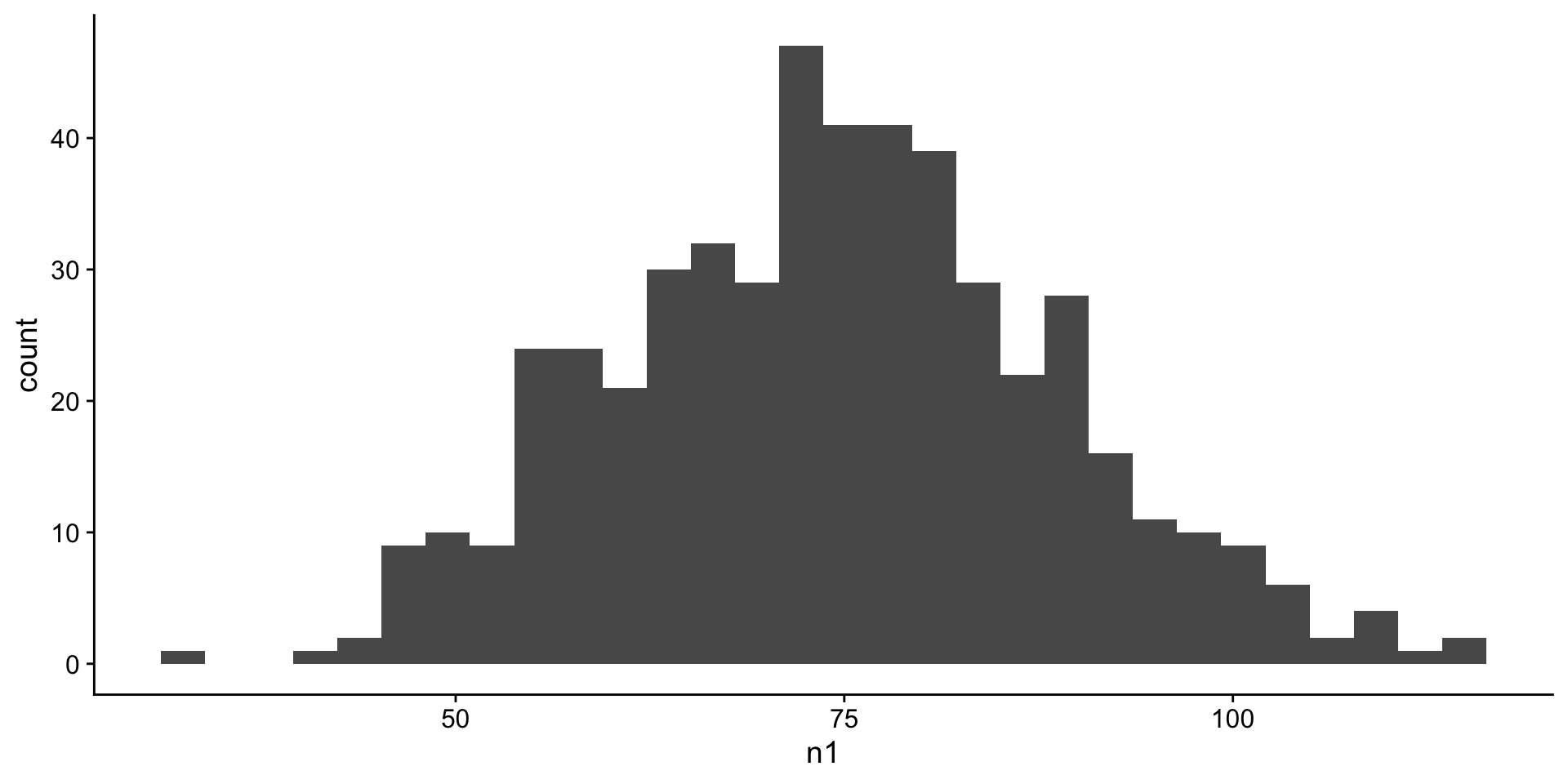

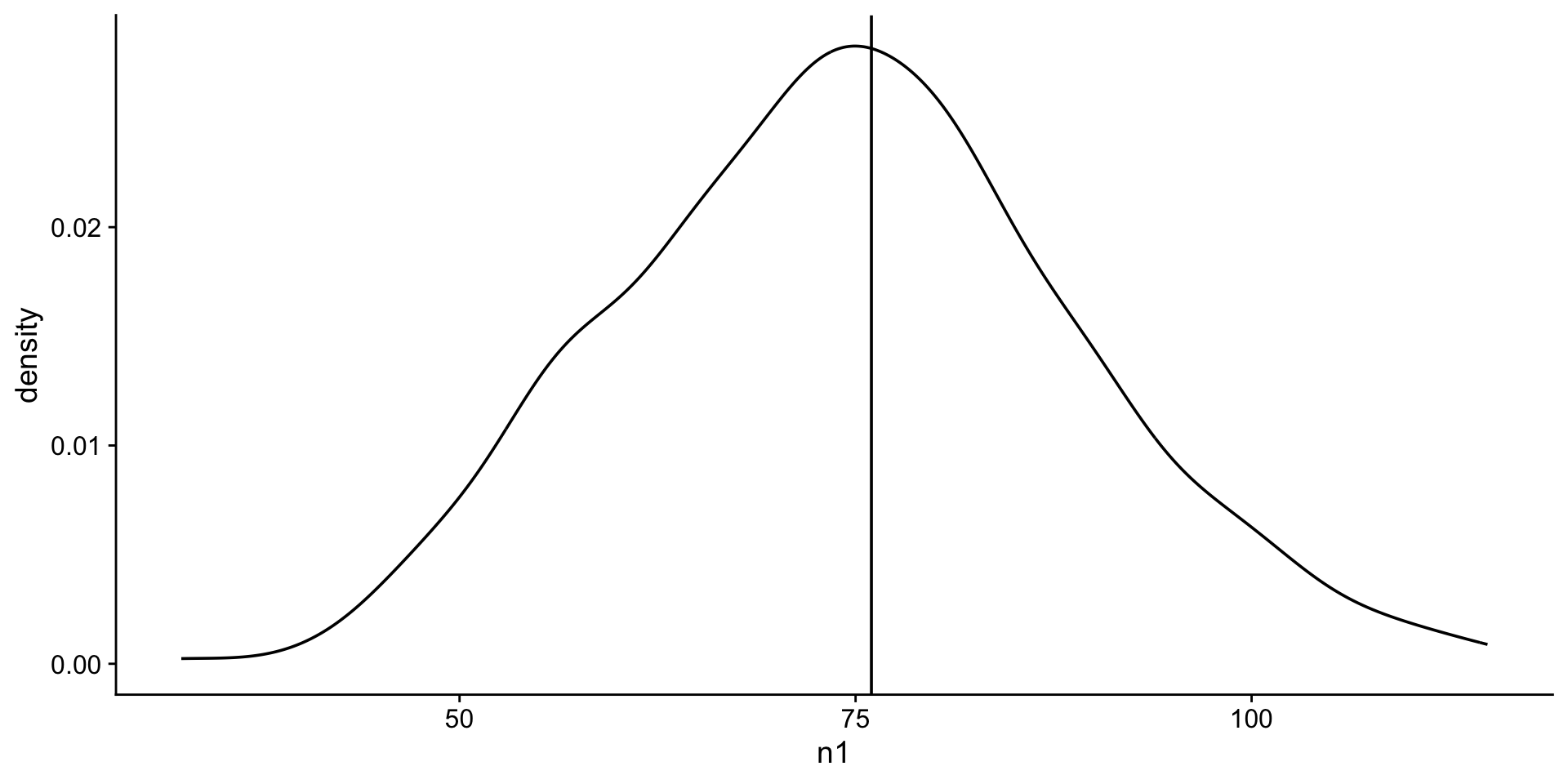

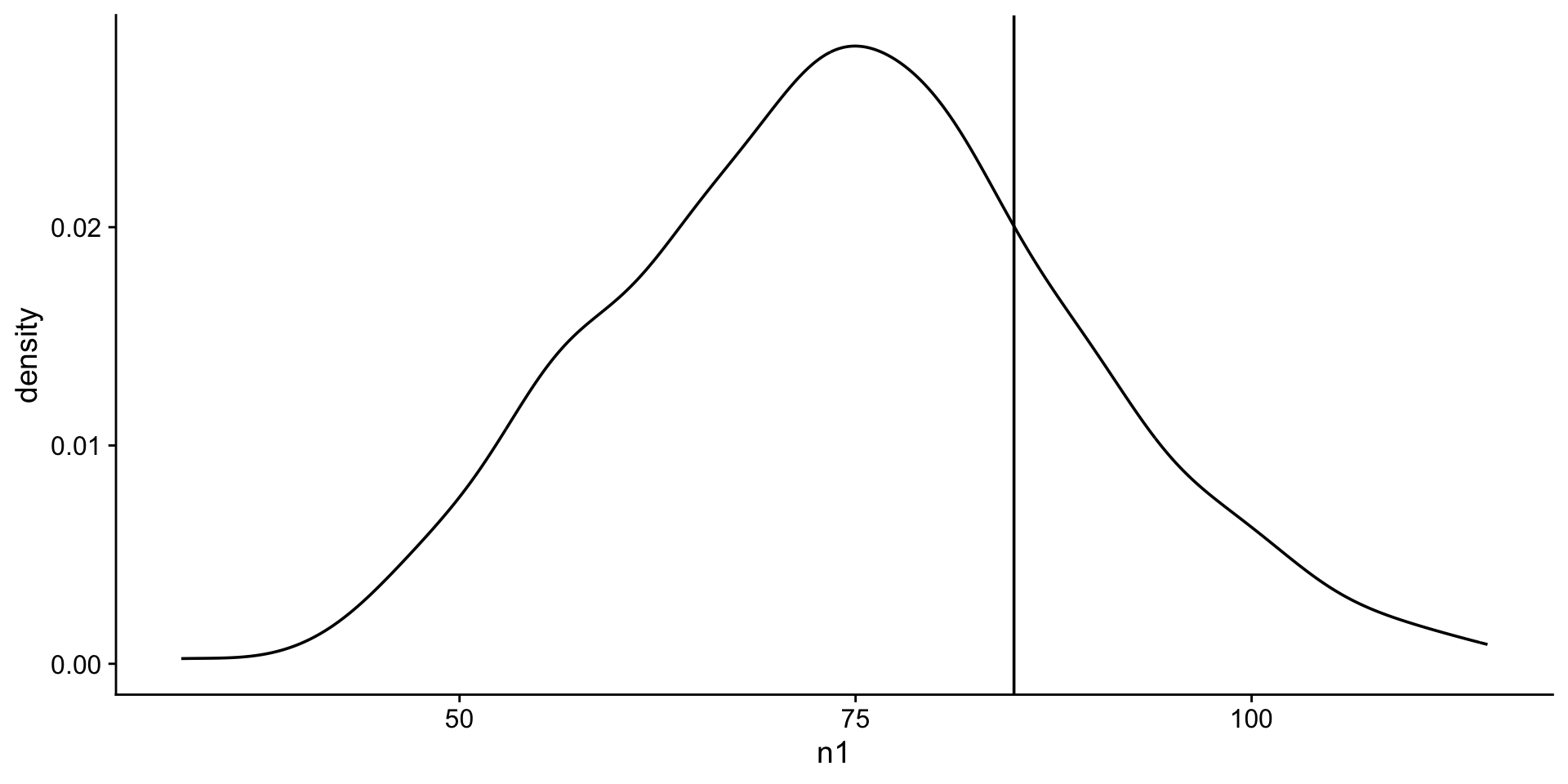

Visualize a normal distribution

First, we will look at a histogram.

Next, a density plot.

Determine the probability of a given value

Probability is used to estimate how likely an outcome (or range of outcomes) is under a given distribution.

Probability refers to the area under the curve (AUC) of the distribution. The larger the area over a range of values, the more probable it is to observe a value in that range.

What is the probability of students scoring 85 or more in the exam?

The probability of 85 or more is equivalent to the area under the curve to the right of 85.
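As a sketch, the area to the right of 85 can be computed with base R's `pnorm()`, using the mean and standard deviation stated earlier. The variable names are illustrative.

```r
# P(score >= 85) for a normal distribution with mean 76 and sd 13.8.
# lower.tail = FALSE returns the area to the right of 85.
p_85_or_more <- pnorm(85, mean = 76, sd = 13.8, lower.tail = FALSE)
p_85_or_more  # roughly 0.26

# Equivalent: 1 minus the area to the left of 85.
p_check <- 1 - pnorm(85, mean = 76, sd = 13.8)
```

So about one in four students would be expected to score 85 or more under this model.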

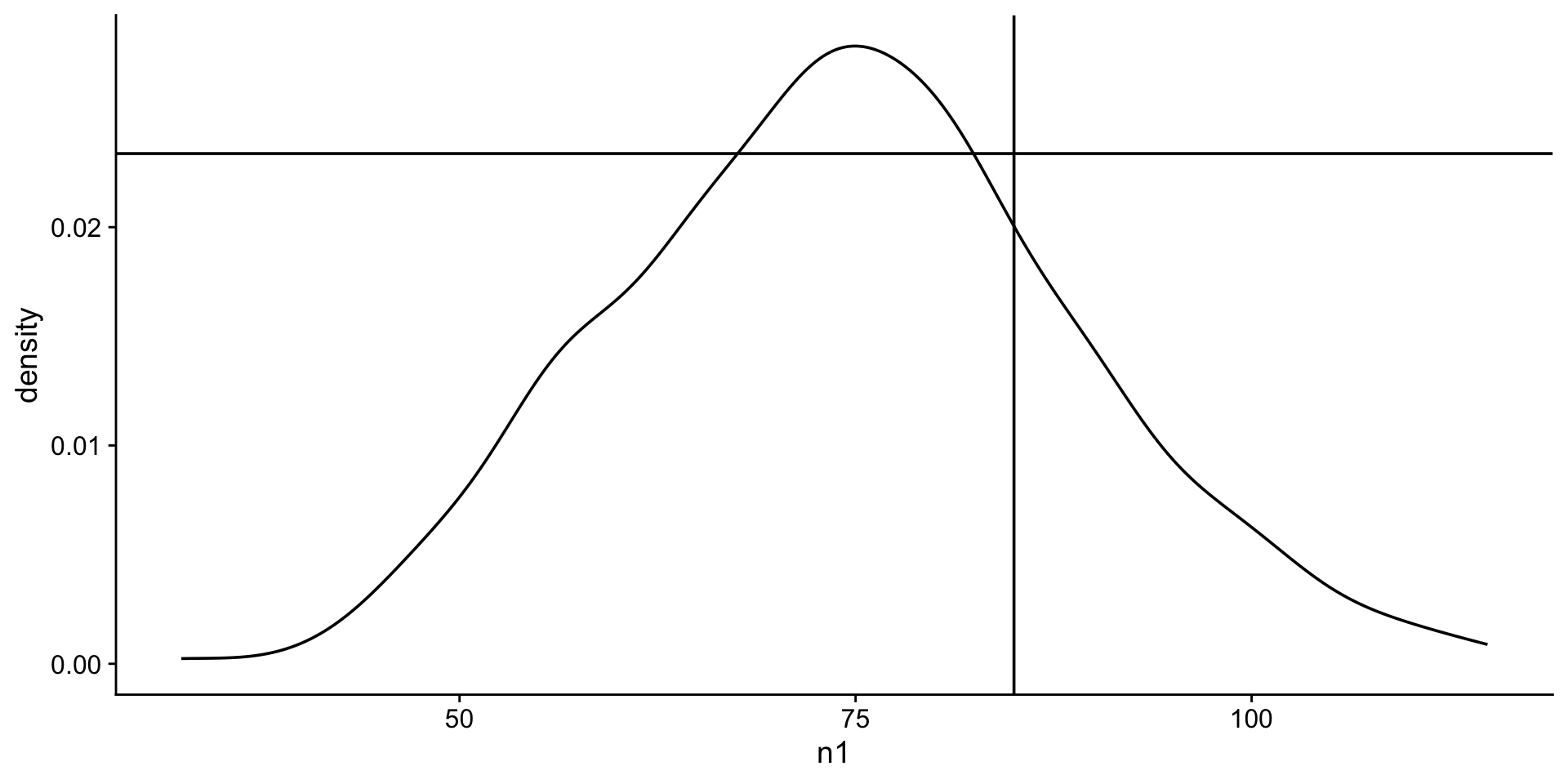

Determine the likelihood of a given value

Likelihood is used to estimate how well a model fits the data. Likelihood refers to a specific point on the distribution curve. The lower the likelihood, the worse the model fits the data.

What is the likelihood of students scoring 85 on the exam?

The likelihood is the y-axis value on the curve when the x-axis = 85.
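A short sketch of reading that y-axis value off the curve with base R's `dnorm()`, again assuming the normal model with mean 76 and standard deviation 13.8. The variable names are illustrative.

```r
# Height of the normal density curve at x = 85 (mean 76, sd 13.8).
lik_85 <- dnorm(85, mean = 76, sd = 13.8)
lik_85  # about 0.023

# For comparison, the curve is highest at the mean.
lik_76 <- dnorm(76, mean = 76, sd = 13.8)
lik_76 > lik_85
```

Note that this is a density (curve height), not a probability, so it is not an area and does not need to be read as "the chance of scoring exactly 85".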

Now to real (messy!) data

We will use the Palmer Penguins dataset

# A tibble: 344 × 17

studyName `Sample Number` Species Region Island Stage

<chr> <dbl> <chr> <chr> <chr> <chr>

1 PAL0708 1 Adelie Pen… Anvers Torge… Adul…

2 PAL0708 2 Adelie Pen… Anvers Torge… Adul…

3 PAL0708 3 Adelie Pen… Anvers Torge… Adul…

4 PAL0708 4 Adelie Pen… Anvers Torge… Adul…

5 PAL0708 5 Adelie Pen… Anvers Torge… Adul…

6 PAL0708 6 Adelie Pen… Anvers Torge… Adul…

7 PAL0708 7 Adelie Pen… Anvers Torge… Adul…

8 PAL0708 8 Adelie Pen… Anvers Torge… Adul…

9 PAL0708 9 Adelie Pen… Anvers Torge… Adul…

10 PAL0708 10 Adelie Pen… Anvers Torge… Adul…

# ℹ 334 more rows

# ℹ 11 more variables: `Individual ID` <chr>,

# `Clutch Completion` <chr>, `Date Egg` <date>,

# `Culmen Length (mm)` <dbl>, `Culmen Depth (mm)` <dbl>,

# `Flipper Length (mm)` <dbl>, `Body Mass (g)` <dbl>,

# Sex <chr>, `Delta 15 N (o/oo)` <dbl>,

# `Delta 13 C (o/oo)` <dbl>, Comments <chr>

Yikes! Some of these column names have horrible formatting, e.g. spaces, slashes, parentheses. These characters can be misinterpreted by R. Also, long/wonky names make coding annoying.

Let’s tidy the names

[1] "studyName" "Sample Number"

[3] "Species" "Region"

[5] "Island" "Stage"

[7] "Individual ID" "Clutch Completion"

[9] "Date Egg" "Culmen Length (mm)"

[11] "Culmen Depth (mm)" "Flipper Length (mm)"

[13] "Body Mass (g)" "Sex"

[15] "Delta 15 N (o/oo)" "Delta 13 C (o/oo)"

[17] "Comments"

janitor package to the rescue.
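The exact janitor call is not shown on the slide (`janitor::clean_names()` is the usual function for this). To illustrate the idea without extra packages, here is a rough base R approximation; the helper `to_snake()` is hypothetical and covers only the kinds of names seen above.

```r
# A few of the raw column names from the penguin data.
raw_names <- c("studyName", "Sample Number", "Culmen Length (mm)", "Delta 15 N (o/oo)")

# Hypothetical helper: a simplified stand-in for what a name cleaner does.
to_snake <- function(x) {
  x <- gsub("([a-z])([A-Z])", "\\1_\\2", x)  # split camelCase: studyName -> study_Name
  x <- tolower(x)                            # lower-case everything
  x <- gsub("[^a-z0-9]+", "_", x)            # spaces, slashes, parentheses -> "_"
  gsub("^_|_$", "", x)                       # drop a leading or trailing "_"
}

clean_names_toy <- to_snake(raw_names)
clean_names_toy
```

With a data frame `df`, the same helper could be applied as `names(df) <- to_snake(names(df))`.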

[1] "study_name" "sample_number"

[3] "species" "region"

[5] "island" "stage"

[7] "individual_id" "clutch_completion"

[9] "date_egg" "culmen_length_mm"

[11] "culmen_depth_mm" "flipper_length_mm"

[13] "body_mass_g" "sex"

[15] "delta_15_n_o_oo" "delta_13_c_o_oo"

[17] "comments"

Let’s inspect the data

tibble [344 × 15] (S3: tbl_df/tbl/data.frame)

$ study_name : chr [1:344] "PAL0708" "PAL0708" "PAL0708" "PAL0708" ...

$ sample_number : num [1:344] 1 2 3 4 5 6 7 8 9 10 ...

$ species : chr [1:344] "Adelie Penguin (Pygoscelis adeliae)" "Adelie Penguin (Pygoscelis adeliae)" "Adelie Penguin (Pygoscelis adeliae)" "Adelie Penguin (Pygoscelis adeliae)" ...

$ island : chr [1:344] "Torgersen" "Torgersen" "Torgersen" "Torgersen" ...

$ individual_id : chr [1:344] "N1A1" "N1A2" "N2A1" "N2A2" ...

$ clutch_completion: chr [1:344] "Yes" "Yes" "Yes" "Yes" ...

$ date_egg : Date[1:344], format: "2007-11-11" ...

$ culmen_length_mm : num [1:344] 39.1 39.5 40.3 NA 36.7 39.3 38.9 39.2 34.1 42 ...

$ culmen_depth_mm : num [1:344] 18.7 17.4 18 NA 19.3 20.6 17.8 19.6 18.1 20.2 ...

$ flipper_length_mm: num [1:344] 181 186 195 NA 193 190 181 195 193 190 ...

$ body_mass_g : num [1:344] 3750 3800 3250 NA 3450 ...

$ sex : chr [1:344] "MALE" "FEMALE" "FEMALE" NA ...

$ delta_15_n_o_oo : num [1:344] NA 8.95 8.37 NA 8.77 ...

$ delta_13_c_o_oo : num [1:344] NA -24.7 -25.3 NA -25.3 ...

$ comments : chr [1:344] "Not enough blood for isotopes." NA NA "Adult not sampled." ...

- attr(*, "spec")=

.. cols(

.. studyName = col_character(),

.. `Sample Number` = col_double(),

.. Species = col_character(),

.. Region = col_character(),

.. Island = col_character(),

.. Stage = col_character(),

.. `Individual ID` = col_character(),

.. `Clutch Completion` = col_character(),

.. `Date Egg` = col_date(format = ""),

.. `Culmen Length (mm)` = col_double(),

.. `Culmen Depth (mm)` = col_double(),

.. `Flipper Length (mm)` = col_double(),

.. `Body Mass (g)` = col_double(),

.. Sex = col_character(),

.. `Delta 15 N (o/oo)` = col_double(),

.. `Delta 13 C (o/oo)` = col_double(),

.. Comments = col_character()

.. )

Let’s select a few of these columns to keep and get rid of NAs
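A minimal base R sketch of this step on a toy data frame. The toy values and the choice of columns are assumptions for illustration; in the tidyverse, `dplyr::select()` and `tidyr::drop_na()` do the same job.

```r
# Toy stand-in for the cleaned penguin table (values are illustrative).
penguins_toy <- data.frame(
  species     = c("Adelie", "Adelie", "Gentoo", "Chinstrap"),
  island      = c("Torgersen", "Torgersen", "Biscoe", "Dream"),
  body_mass_g = c(3750, NA, 5000, 3700),
  sex         = c("MALE", NA, "FEMALE", "FEMALE"),
  comments    = c(NA, "Adult not sampled.", NA, NA)
)

# Keep only the columns of interest (assumed selection for this sketch).
keep <- c("species", "island", "body_mass_g", "sex")
penguins_small <- penguins_toy[, keep]

# Drop every row that has an NA in any of the kept columns.
penguins_clean <- penguins_small[complete.cases(penguins_small), ]
nrow(penguins_clean)  # 3 rows remain
```

Selecting columns before dropping NAs matters: a mostly empty column such as `comments` would otherwise remove nearly every row.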

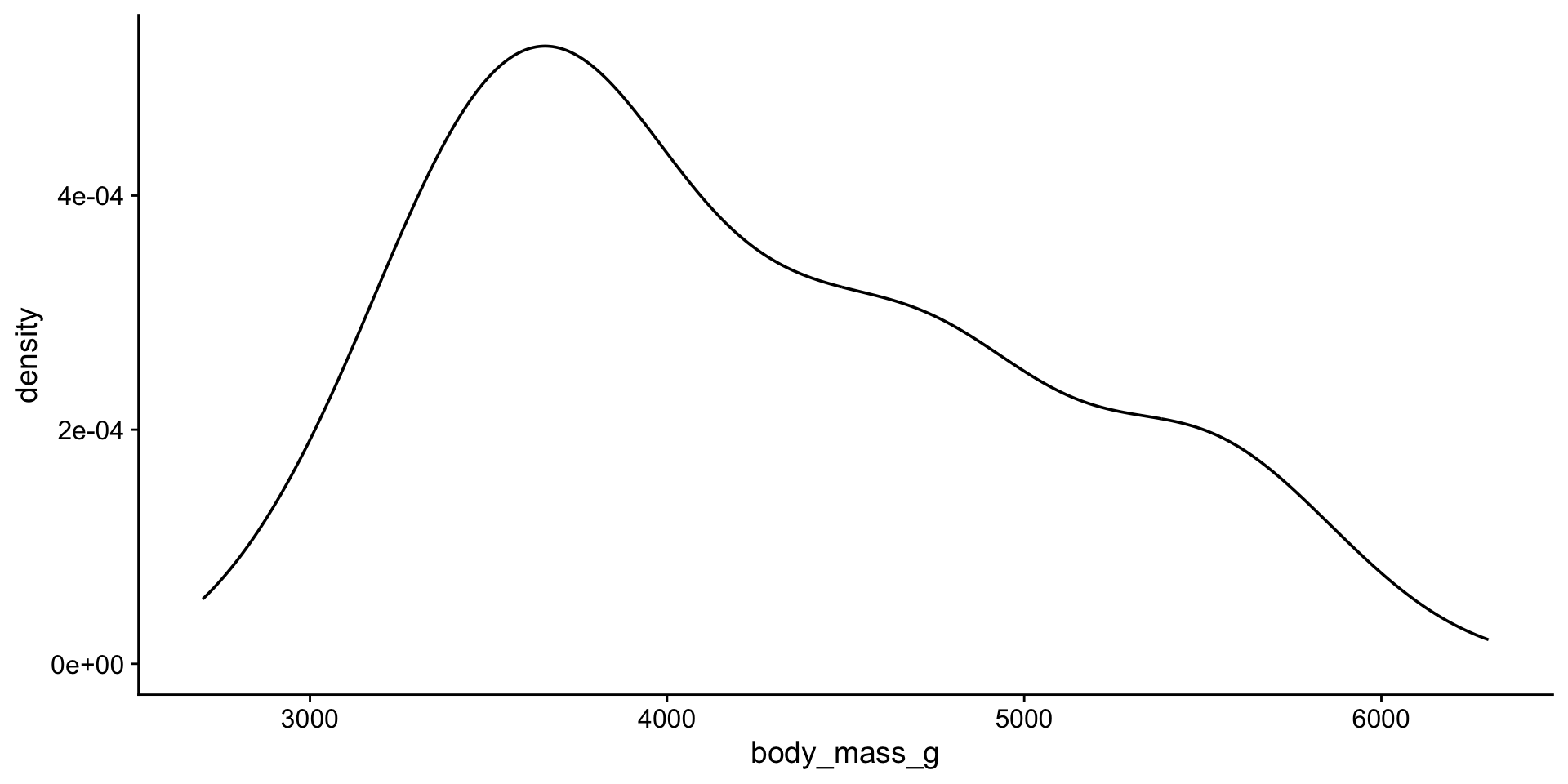

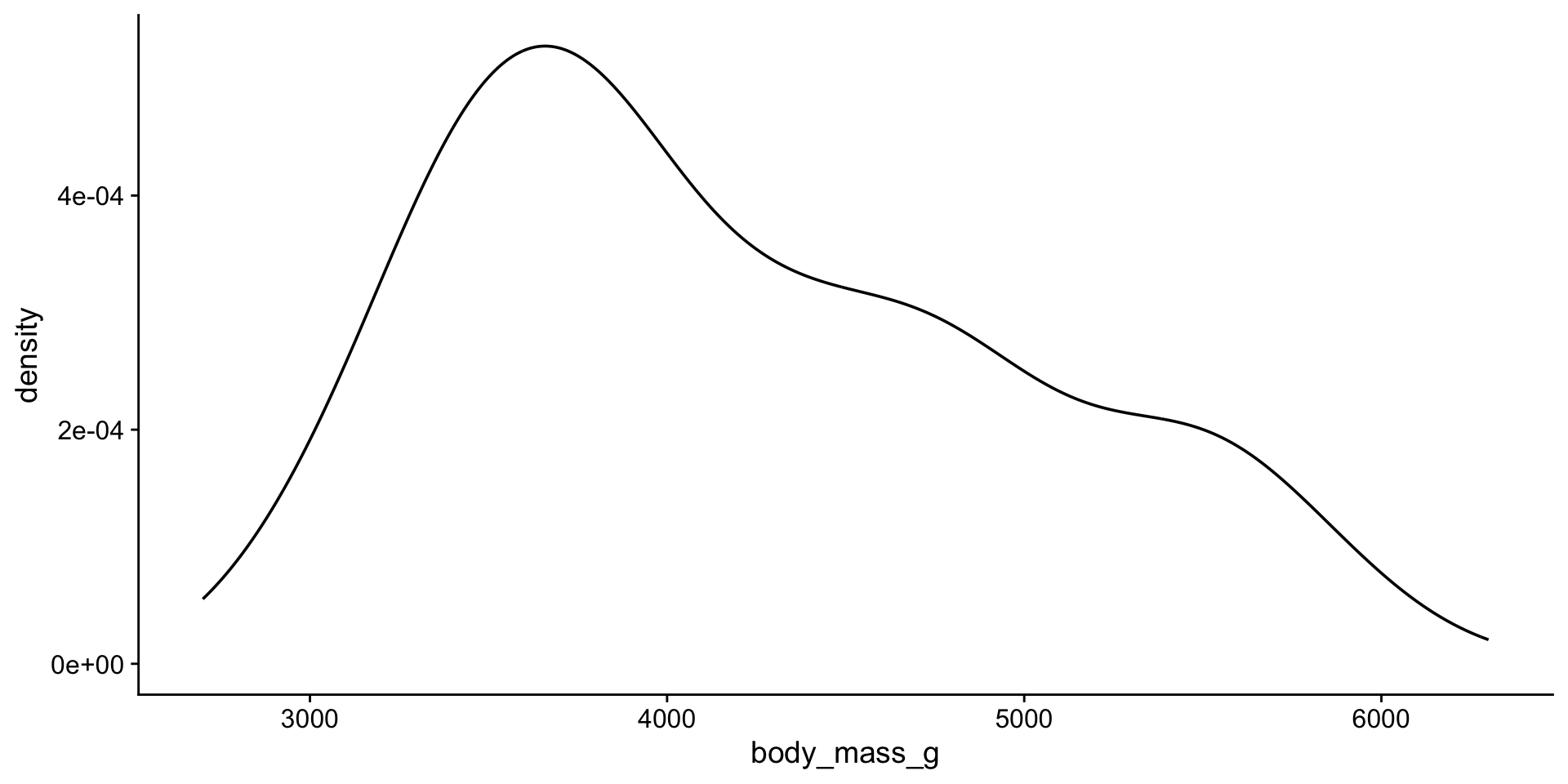

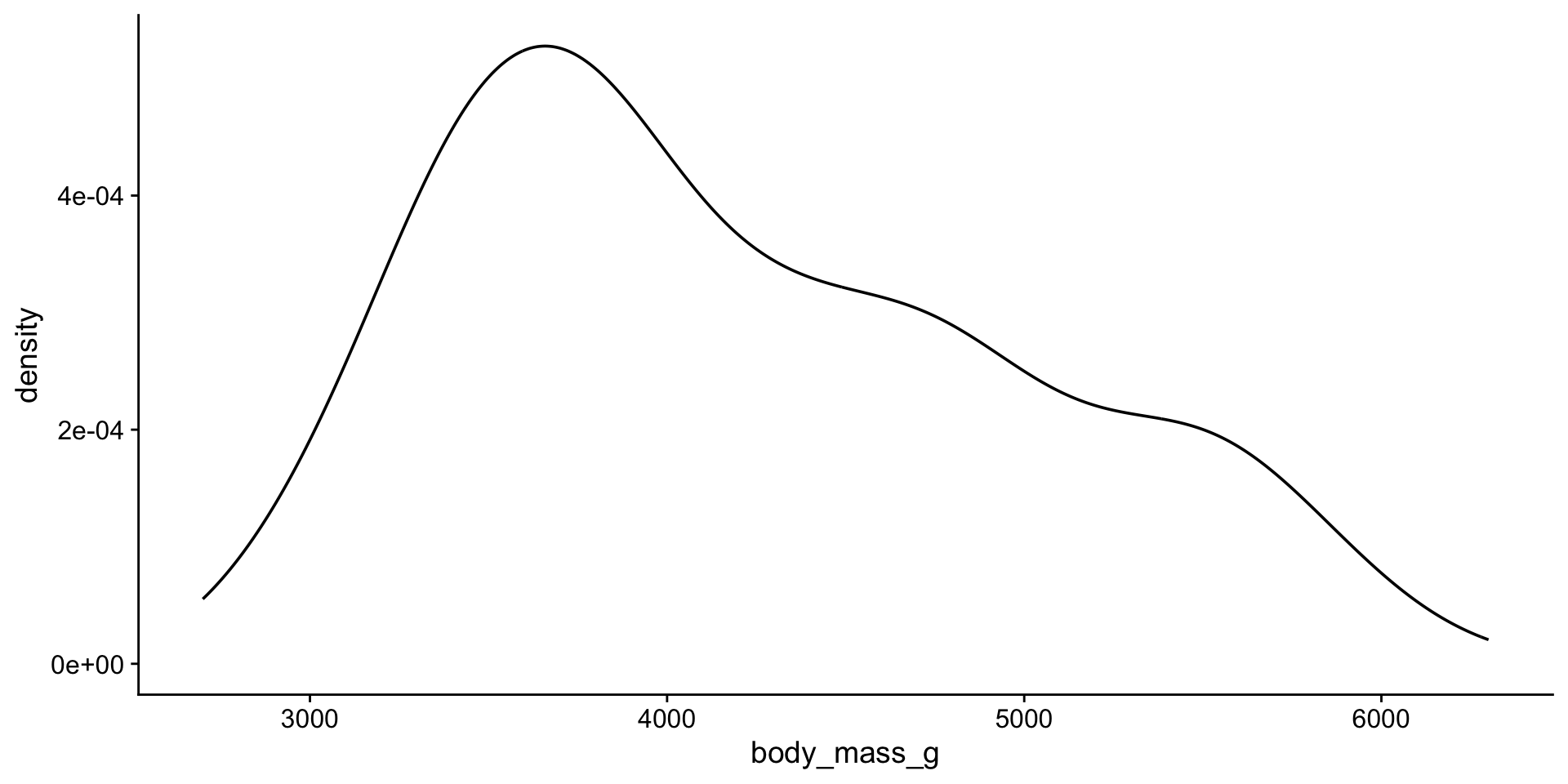

Visualizing quantitative variables

histogram

density plot

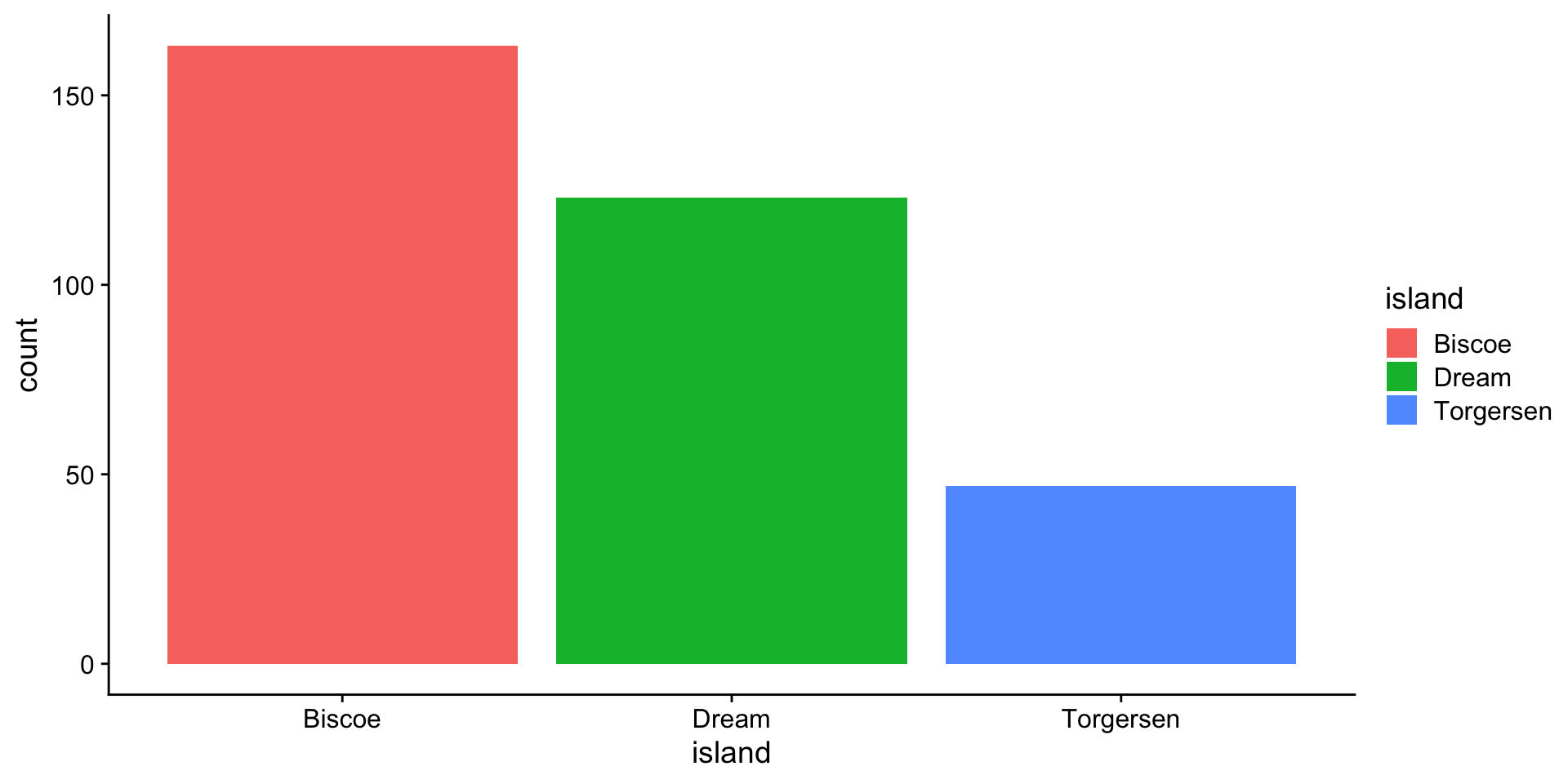

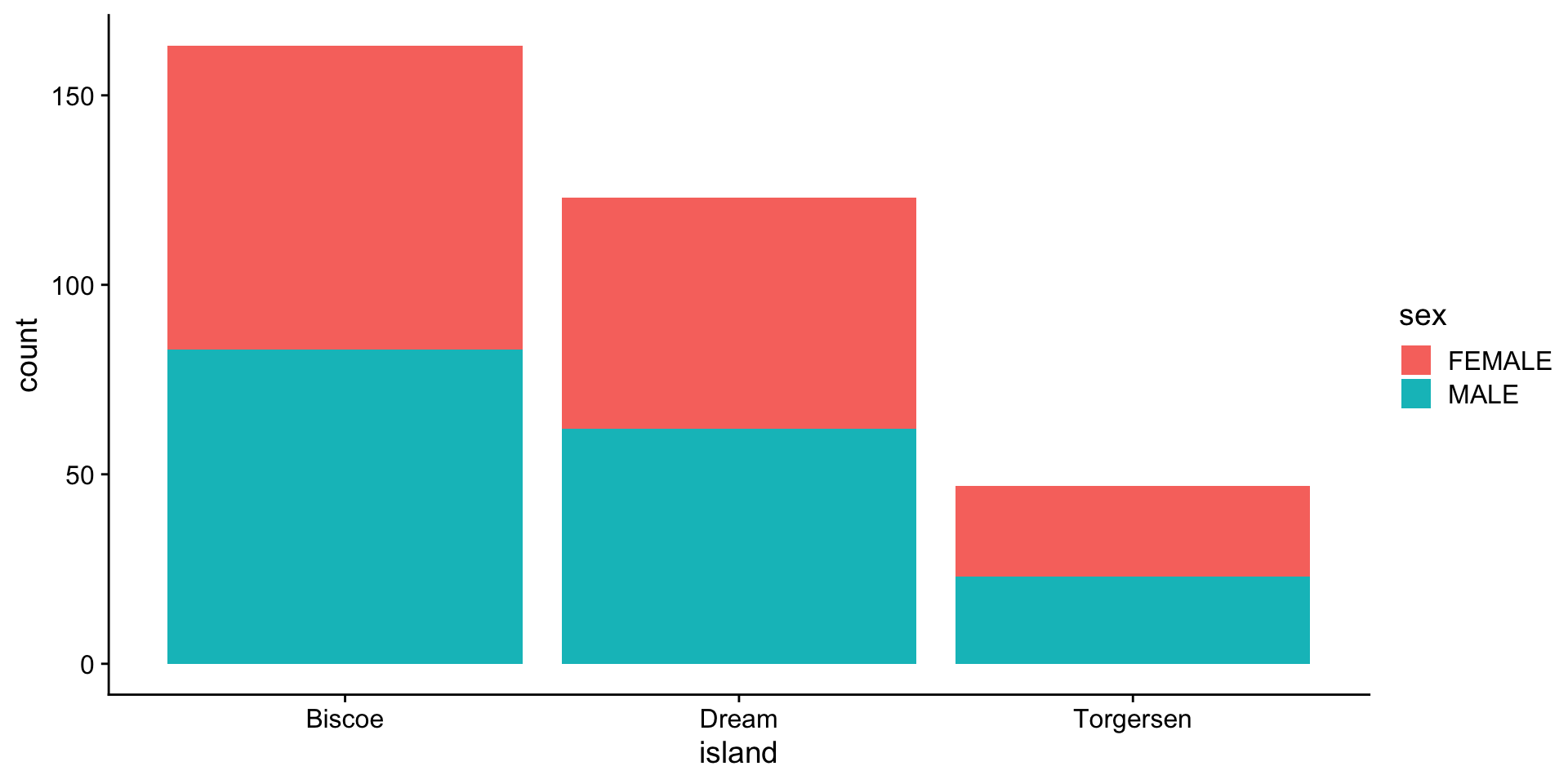

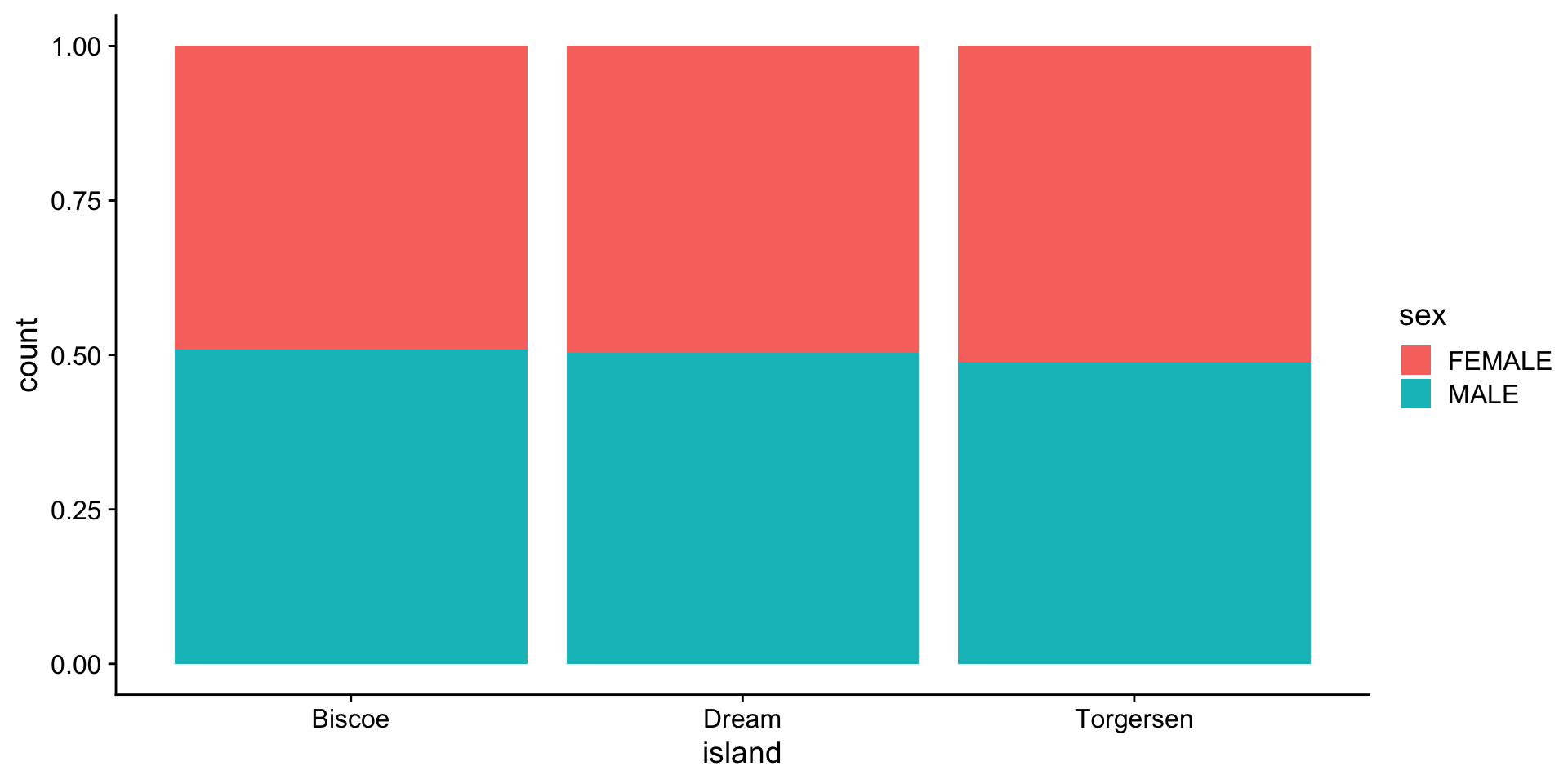

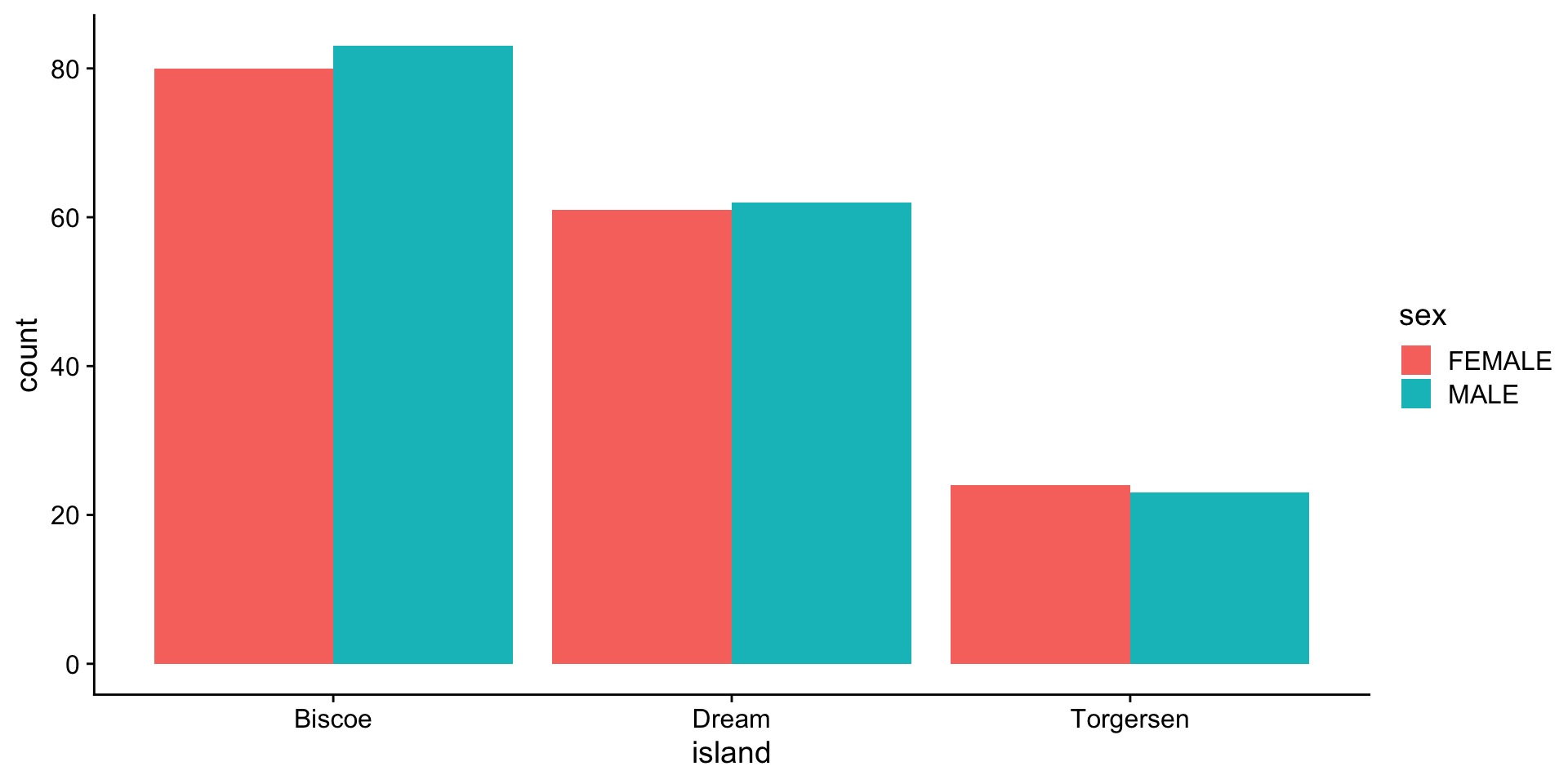

Visualizing categorical variables

barplot - 1 category

barplot - categories (island vs sex)

stacked:

proportion

per category

Descriptive statistics for continuous data

n: number of observations/individuals, or sample size

mean (\(\mu\)): sum of all observations divided by the number of observations, \(\mu = \displaystyle \frac {\sum x_i} {n}\)

median: the “middle” value of a data set. Not as sensitive to outliers as the mean.
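A small sketch of why the median is less sensitive to outliers than the mean. The body-mass-like numbers are made up for illustration.

```r
# Five plausible body masses (g), made up for this example.
x <- c(3500, 3600, 3700, 3800, 3900)
mean(x)    # 3700
median(x)  # 3700

# Add one extreme value and recompute.
x_outlier <- c(x, 9000)
mean_out   <- mean(x_outlier)    # pulled up to about 4583
median_out <- median(x_outlier)  # barely moves: 3750
```

One extreme value shifts the mean by almost 900 g but the median by only 50 g.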


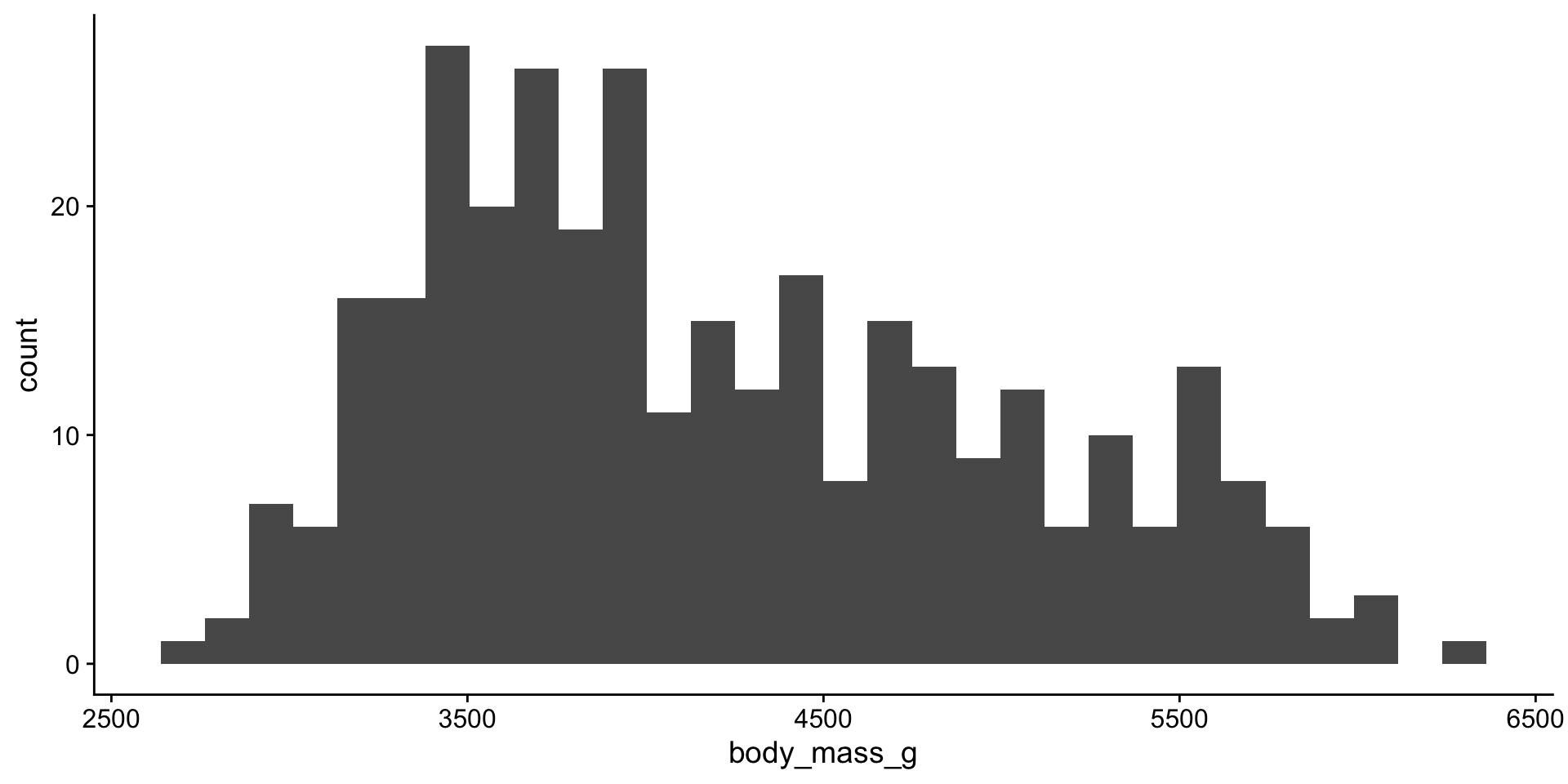

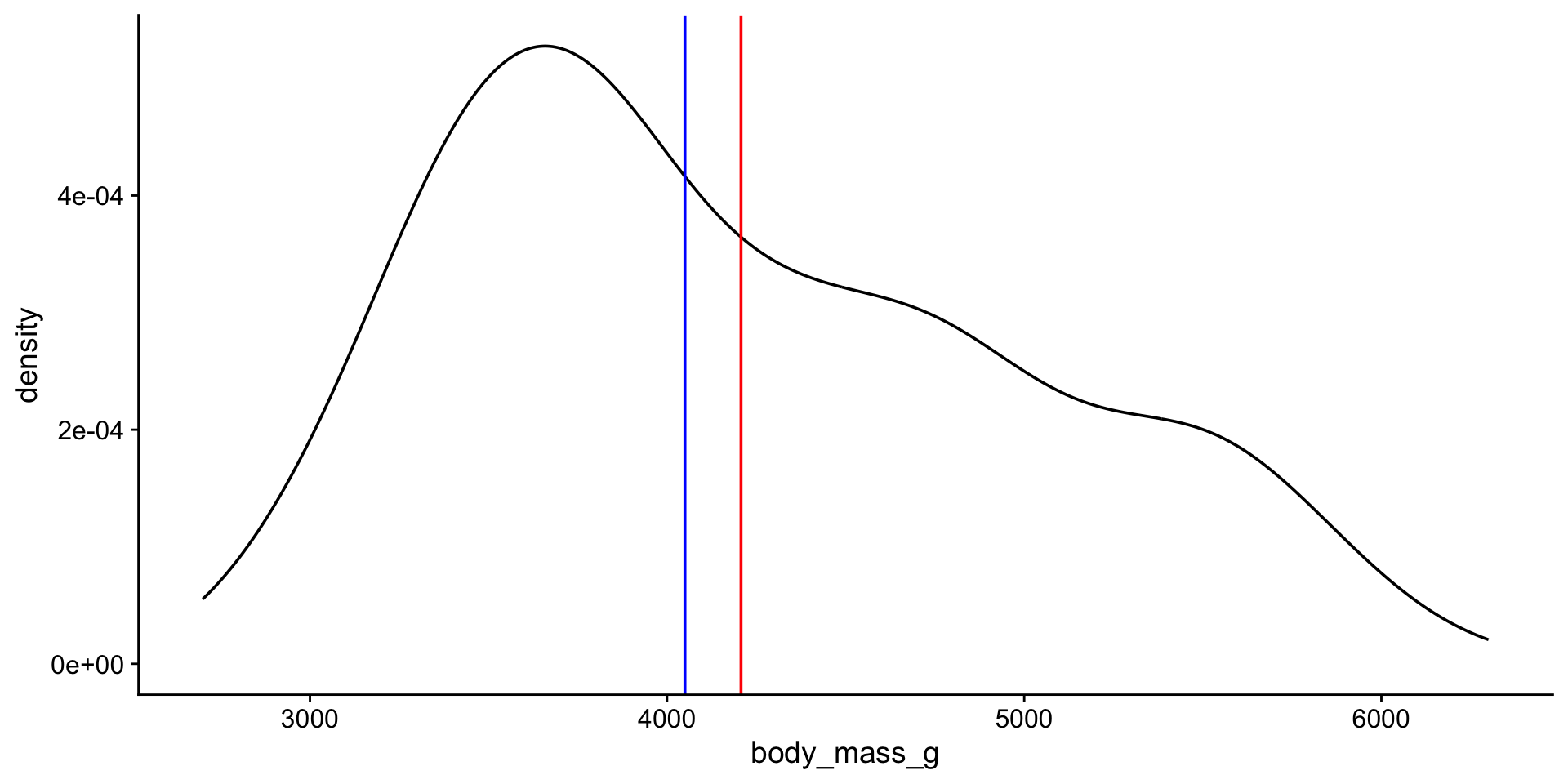

Descriptive statistics for body weight

Let’s look at the distribution again

viz mean + median

Other descriptive statistics

Min: minimum value.

Max: maximum value.

q1, q3: the first and the third quartile, respectively.

IQR: interquartile range measures the spread of the middle half of your data (q3-q1).
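These statistics are all available in base R. A minimal sketch on a small made-up vector (note that `quantile()` uses its default interpolation method, type 7):

```r
# Small made-up data set.
x <- 1:9

x_min <- min(x)  # 1
x_max <- max(x)  # 9

# First and third quartiles (default quantile type 7).
q <- quantile(x, probs = c(0.25, 0.75))
q1 <- unname(q[1])  # 3
q3 <- unname(q[2])  # 7

# Interquartile range: q3 - q1.
x_iqr <- IQR(x)  # 4
```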

Quick way to get all these stats:

# A tibble: 1 × 10

variable n min max median iqr mean sd se

<fct> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 body_mas… 333 2700 6300 4050 1225 4207. 805. 44.1

# ℹ 1 more variable: ci <dbl>

Statistics describing spread of values

Variance: the average of the squared differences from the mean

\(\sigma^2 = \displaystyle \frac {\sum (x_{i} - \mu)^2}{n}\)

Standard Deviation: square root of the variance

\(\sigma = \sqrt {\displaystyle \frac {\sum (x_{i} - \mu)^2}{n}}\)

The variance measures the mathematical dispersion of the data relative to the mean. However, it is more difficult to apply in a real-world sense because the values used to calculate it were squared. The standard deviation, as the square root of the variance, is in the same units as the original values, which makes it much easier to work with and interpret with respect to the mean.
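A short sketch that computes the variance and standard deviation exactly as in the formulas above (dividing by \(n\)) and compares them with R's built-in `var()` and `sd()`, which divide by \(n - 1\) (the sample versions). The data are made up.

```r
# Made-up data with mean 5.
x <- c(2, 4, 4, 4, 5, 5, 7, 9)
n <- length(x)

# Formula from the slide: average squared difference from the mean (divide by n).
pop_var <- sum((x - mean(x))^2) / n  # 4
pop_sd  <- sqrt(pop_var)             # 2

# R's built-ins use n - 1 in the denominator (sample variance / sd).
samp_var <- var(x)  # 32 / 7, about 4.57
samp_sd  <- sd(x)
```

For large samples such as the penguin data the two versions are nearly identical; the difference matters mostly for small \(n\).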

Other stats describing spread of data

Confidence Interval (ci): a range of values that you can be 95% (or x%) certain contains the true population mean. Gets into inferential statistics.
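As a sketch, a 95% confidence interval for a mean can be built from the standard error and a t quantile. The inputs below are the rounded body mass values from the summary table (n = 333, mean 4207, sd 805), so the result is approximate; the variable names are illustrative.

```r
# Rounded summary values for body mass taken from the table above.
n <- 333
m <- 4207
s <- 805

# Standard error of the mean.
se <- s / sqrt(n)  # about 44.1, matching the se column

# Half-width of the 95% CI, using the t distribution with n - 1 df.
t_crit  <- qt(0.975, df = n - 1)
ci_half <- t_crit * se

# Lower and upper bounds of the interval.
ci <- c(lower = m - ci_half, upper = m + ci_half)
ci
```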

Get more descriptive stats easily

# A tibble: 1 × 5

variable n mean median sd

<fct> <dbl> <dbl> <dbl> <dbl>

1 body_mass_g 333 4207. 4050 805.

by species

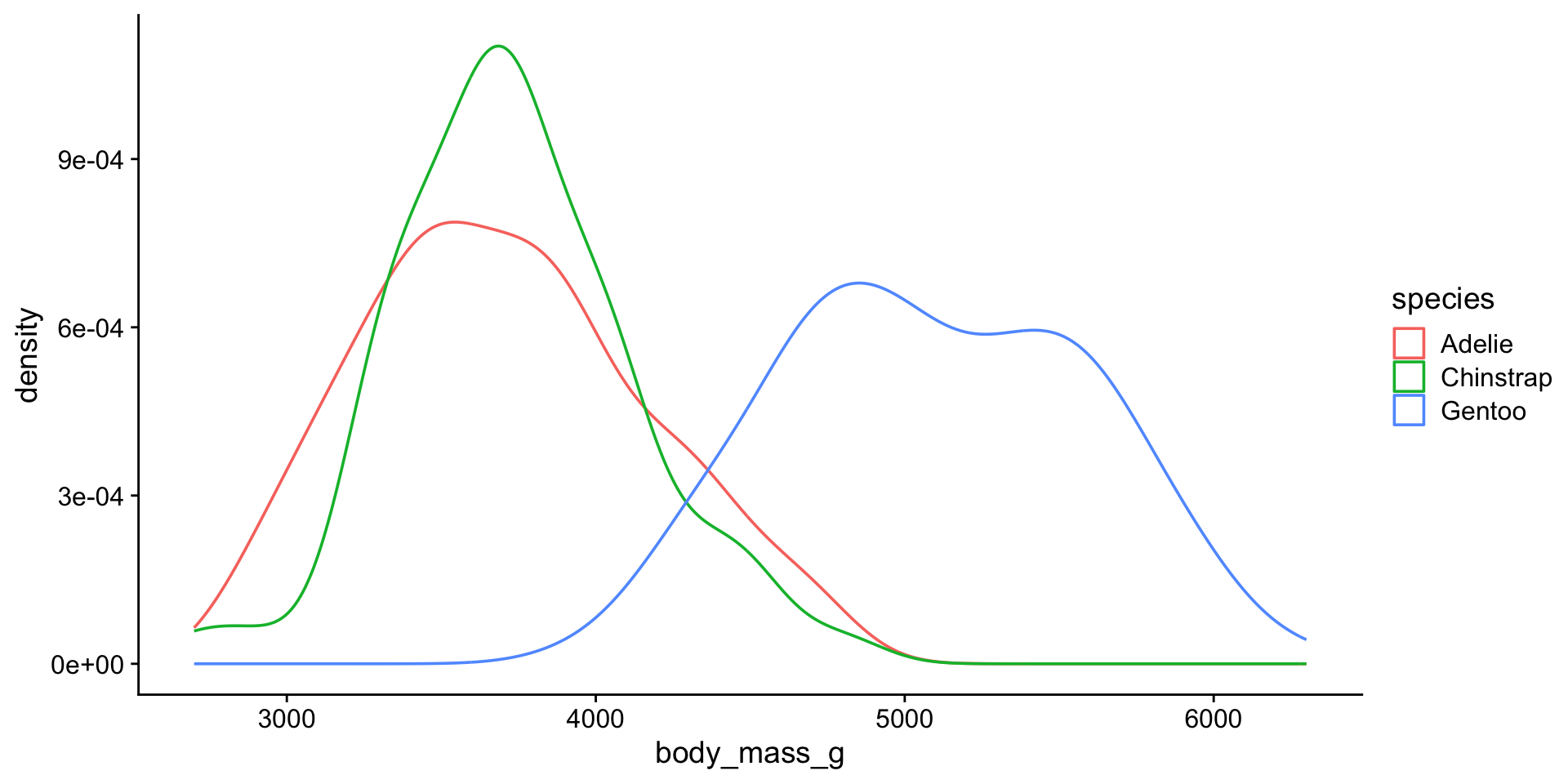

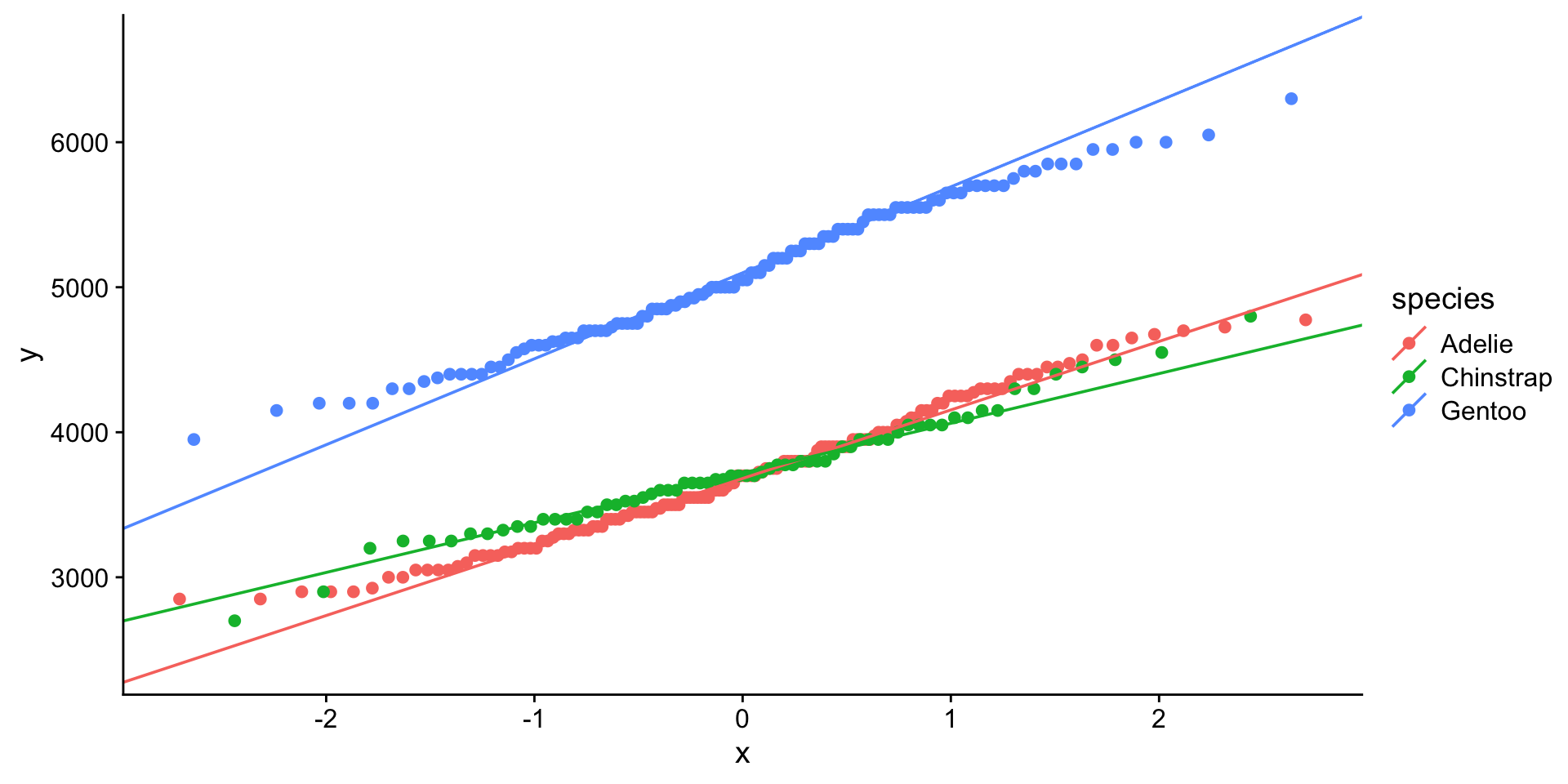

# A tibble: 3 × 6

species variable n mean median sd

<chr> <fct> <dbl> <dbl> <dbl> <dbl>

1 Adelie body_mass_g 146 3706. 3700 459.

2 Chinstrap body_mass_g 68 3733. 3700 384.

3 Gentoo body_mass_g 119 5092. 5050 501.

by species and island

# A tibble: 5 × 7

species island variable n mean median sd

<chr> <chr> <fct> <dbl> <dbl> <dbl> <dbl>

1 Adelie Biscoe body_mass_g 44 3710. 3750 488.

2 Adelie Dream body_mass_g 55 3701. 3600 449.

3 Adelie Torgersen body_mass_g 47 3709. 3700 452.

4 Chinstrap Dream body_mass_g 68 3733. 3700 384.

5 Gentoo Biscoe body_mass_g 119 5092. 5050 501.

Normal distribution

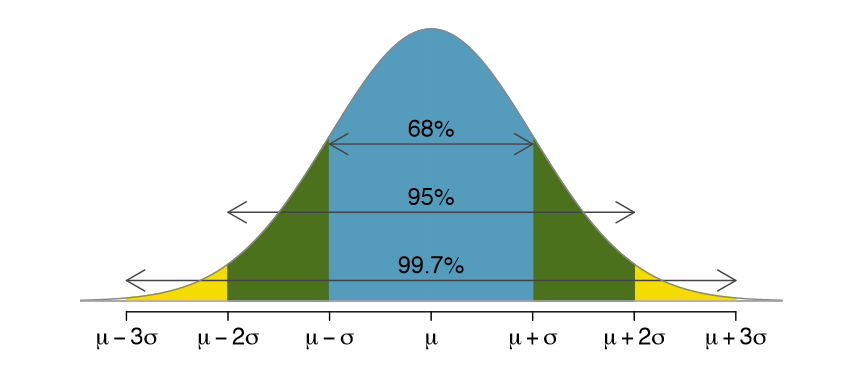

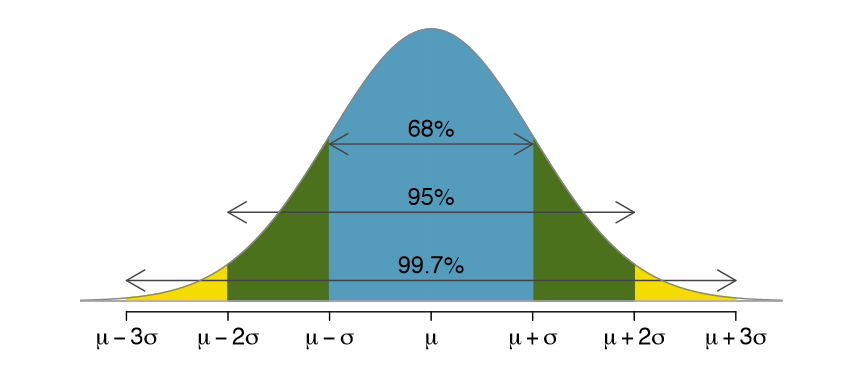

The mean, mode, and median are all equal.

The distribution is symmetric about the mean—half the values fall below the mean and half above the mean.

The distribution can be described by two values: the mean and the standard deviation.

Standard normal distribution: a special normal distribution (bell curve) where the mean is 0 and the standard deviation is 1.

Normal distribution metrics

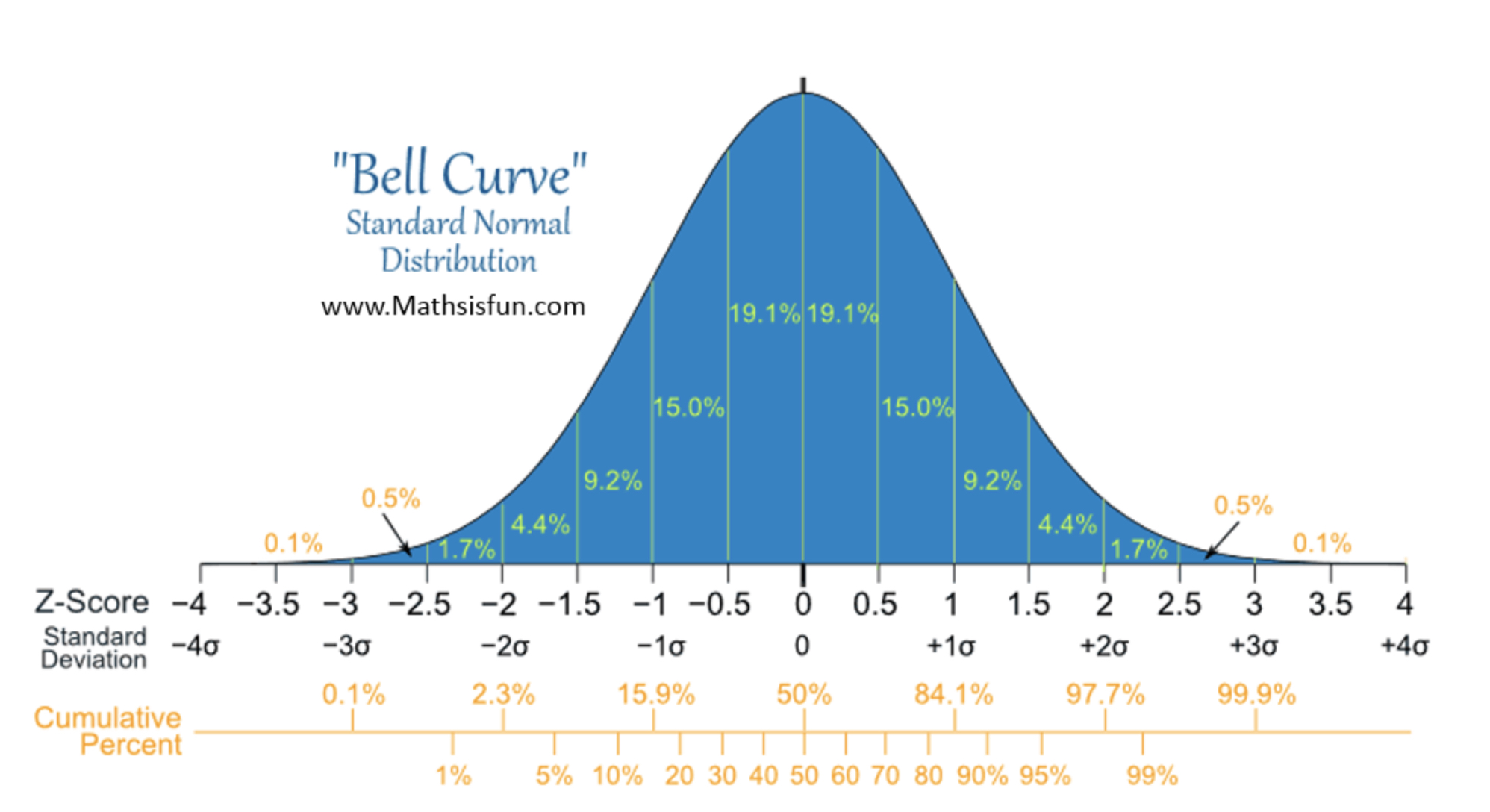

Skewness is a measure of the asymmetry around the mean. 0 for bell curve.

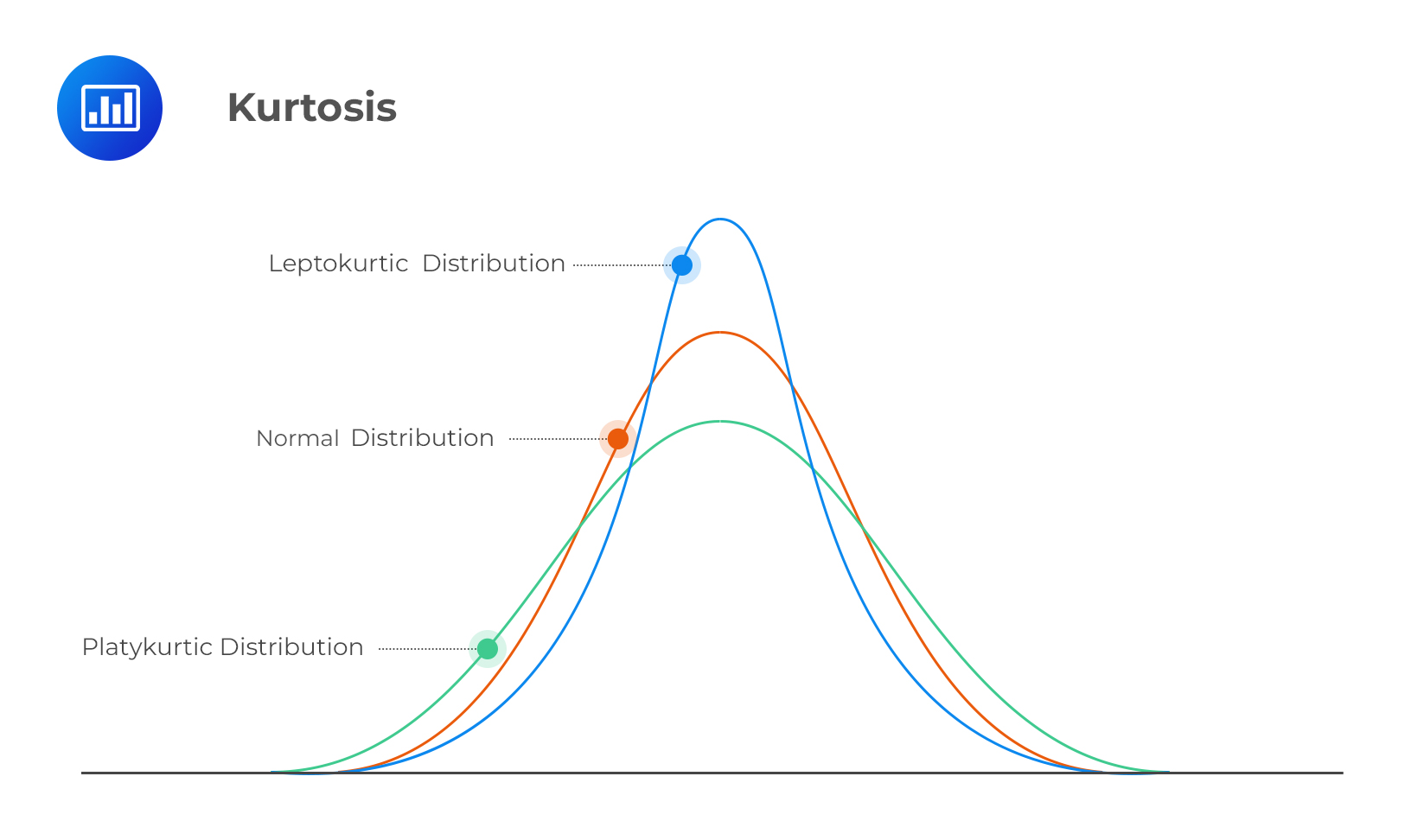

Normal distribution metrics

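Kurtosis is a measure of the “tailedness” of the distribution (how heavy the tails are relative to a normal distribution), often loosely described as “flatness”. 3 for a normal distribution.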
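A minimal sketch of moment-based skewness and kurtosis in base R. The helper functions `skewness()` and `kurtosis()` are defined here for illustration; packaged implementations may use slightly different (bias-corrected or "excess") definitions.

```r
# Moment-based skewness: third central moment scaled by sd^3.
skewness <- function(x) {
  d <- x - mean(x)
  mean(d^3) / mean(d^2)^1.5
}

# Moment-based kurtosis: fourth central moment scaled by sd^4
# (a normal distribution has kurtosis 3 under this definition).
kurtosis <- function(x) {
  d <- x - mean(x)
  mean(d^4) / mean(d^2)^2
}

symmetric    <- c(-2, -1, 0, 1, 2)
right_skewed <- c(1, 1, 1, 2, 10)

skewness(symmetric)     # 0: perfectly symmetric
skewness(right_skewed)  # positive: long right tail
kurtosis(symmetric)     # 1.7: flatter than a normal distribution
```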

Is my data normal(ly distributed)?

Let’s look at the test score distribution again

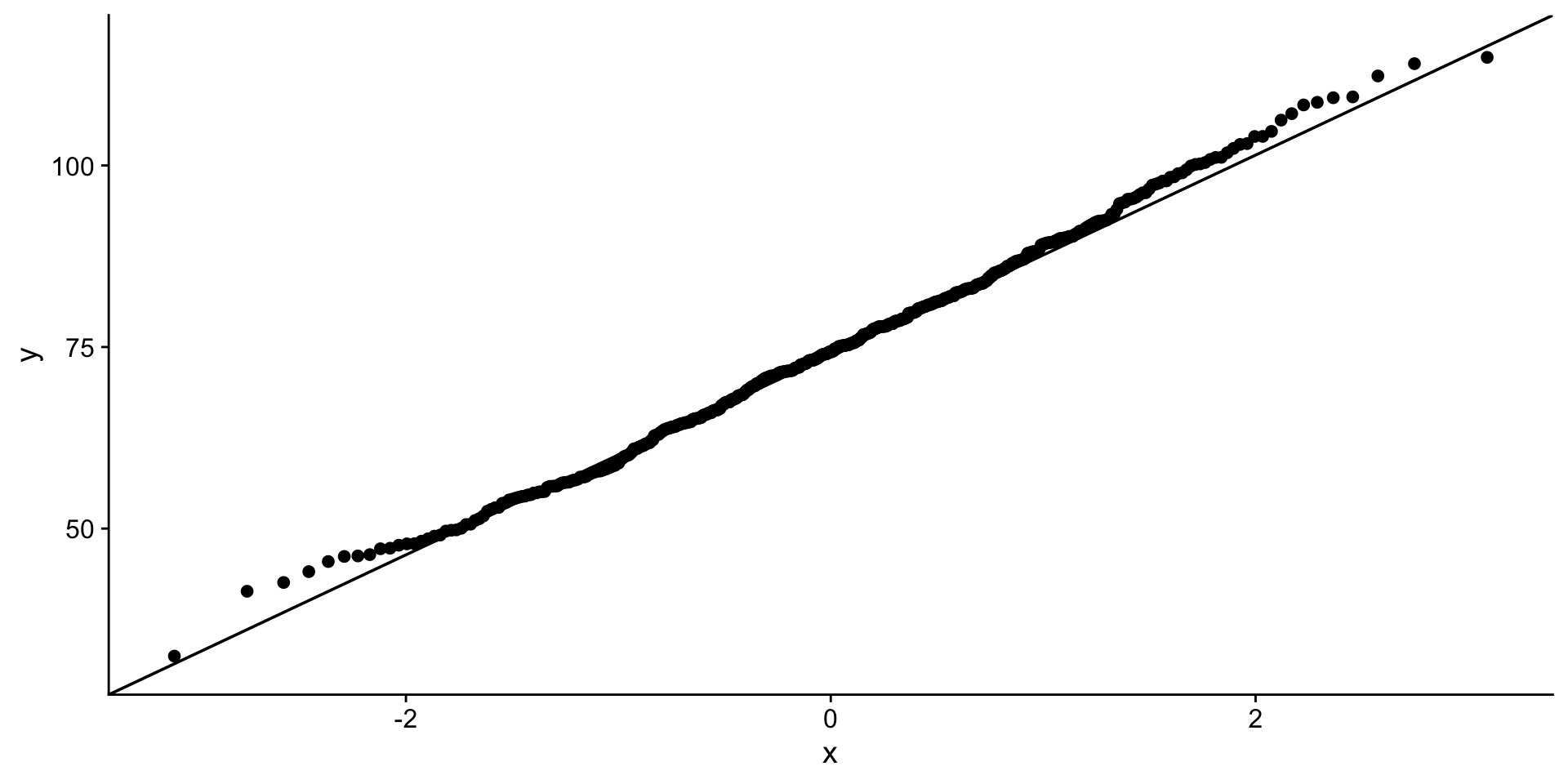

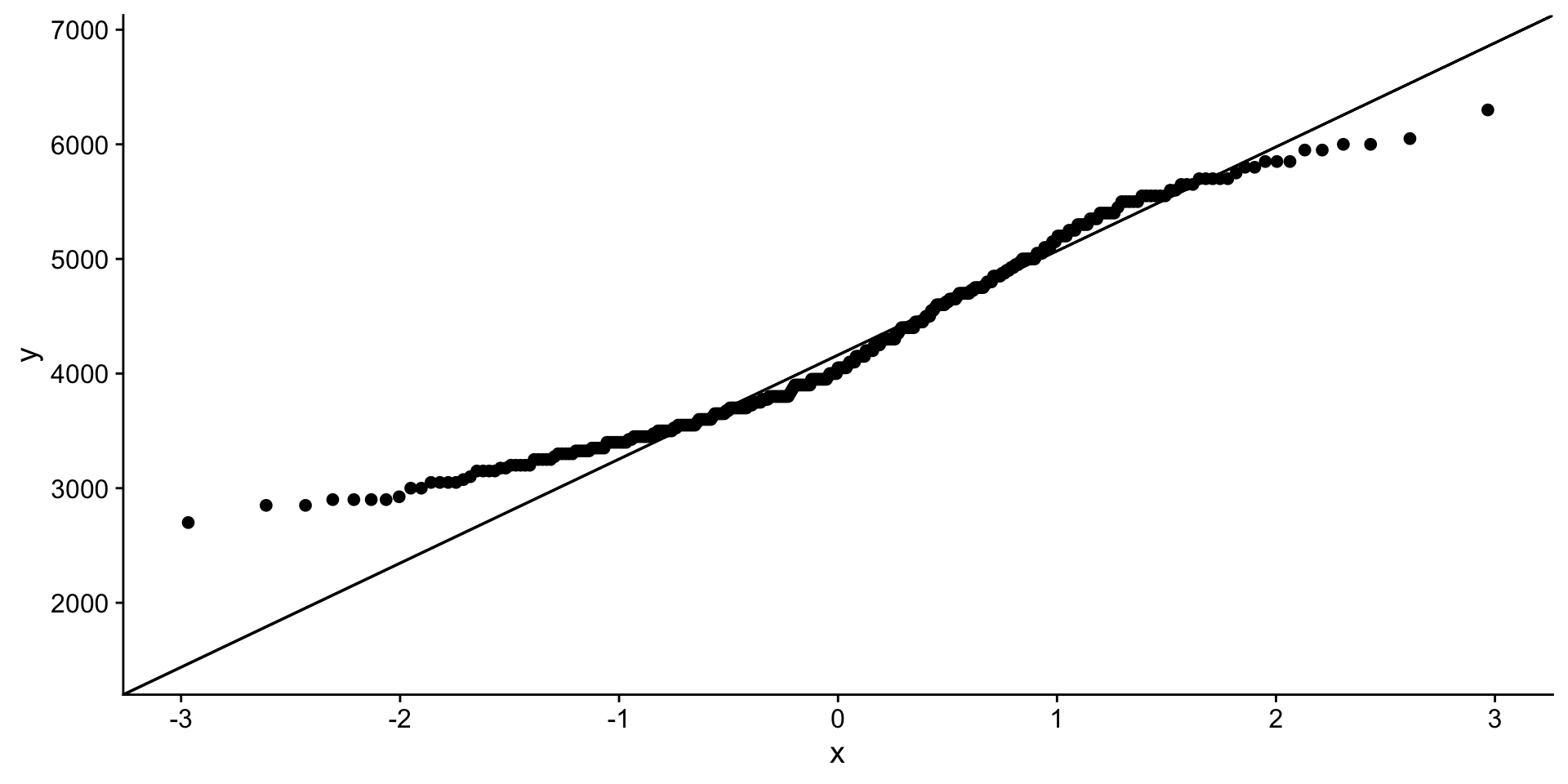

QQ-plot

quantile-quantile plot to compare an empirical distribution to a theoretical distribution.

Quantile is the fraction (or percent) of points below the given value. For example, the 0.2 (or 20%) quantile is the point at which 20% of the data fall below and 80% fall above that value.
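A sketch of both ideas in base R, using simulated test scores (the seed and n = 500 are arbitrary choices). `qqnorm()` is called with `plot.it = FALSE` here so that the QQ-plot coordinates can be inspected directly.

```r
# Simulated test scores (seed and sample size are assumptions for this sketch).
set.seed(1)
scores <- rnorm(500, mean = 76, sd = 13.8)

# The 0.2 quantile: about 20% of the scores fall below this value.
q20 <- quantile(scores, probs = 0.2)
frac_below <- mean(scores < q20)

# QQ-plot coordinates: theoretical normal quantiles (x) vs. observed values (y).
qq <- qqnorm(scores, plot.it = FALSE)

# For normally distributed data the points fall close to a straight line,
# so the correlation between the two sets of quantiles is near 1.
qq_cor <- cor(qq$x, qq$y)

# To draw the plot itself: qqnorm(scores); qqline(scores)
```

Systematic curvature away from the reference line, rather than a few stray points at the ends, is the usual sign of non-normality.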

Shapiro-Wilk Normality Test

Shapiro-Wilk test is a hypothesis test that evaluates whether a data set is normally distributed.

It evaluates data from a sample with the null hypothesis that the data set is normally distributed.

A large p-value means we fail to reject the null hypothesis, so the data are consistent with a normal distribution; a low p-value (e.g., < 0.05) indicates that the data are not normally distributed.
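A minimal sketch with base R's `shapiro.test()` on simulated data: one sample drawn from a normal distribution and one from a strongly skewed (exponential) distribution. The seed, sample sizes, and variable names are assumptions for illustration.

```r
set.seed(1)

# One sample from a normal distribution, one from a skewed distribution.
normal_sample <- rnorm(200, mean = 76, sd = 13.8)
skewed_sample <- rexp(200, rate = 1)

# shapiro.test() returns a list; the p-value is in $p.value.
p_normal <- shapiro.test(normal_sample)$p.value
p_skewed <- shapiro.test(skewed_sample)$p.value

p_normal  # usually large for truly normal data
p_skewed  # very small: normality is clearly rejected
```

Keep in mind that with very large samples the test can flag tiny, practically irrelevant departures from normality, so it is best read alongside a QQ-plot.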

Back to penguin body mass

Distribution

QQ-plot body mass

Hmmm…

Shapiro-Wilk body mass

# A tibble: 1 × 3

variable statistic p

<chr> <dbl> <dbl>

1 body_mass_g 0.958 0.0000000357

That does not look normal!

Penguin body mass by species?

Distribution

QQ-plot body weight

That looks better…

Shapiro-Wilk body weight

# A tibble: 3 × 4

species variable statistic p

<chr> <chr> <dbl> <dbl>

1 Adelie body_mass_g 0.981 0.0423

2 Chinstrap body_mass_g 0.984 0.561

3 Gentoo body_mass_g 0.986 0.261

OK, so Chinstrap and Gentoo look normal. Adelie is borderline (p = 0.0423, just under 0.05). We may want to consider using a non-parametric test to compare body weights between Adelie and Chinstrap or Gentoo.

Central limit theorem

The central limit theorem states that if you take sufficiently large samples from a population, the samples’ means will be approximately normally distributed, even if the population isn’t normally distributed.
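A small simulation sketch of the theorem, using a clearly non-normal (exponential) population. The population, seed, sample size of 50, and 1000 repetitions are all assumptions chosen for illustration.

```r
set.seed(123)

n_per_sample <- 50    # observations in each sample
n_samples    <- 1000  # number of samples drawn

# Draw many samples from a skewed population (exponential, mean 1, sd 1)
# and keep only the mean of each sample.
sample_means <- replicate(n_samples, mean(rexp(n_per_sample, rate = 1)))

# The sample means cluster around the population mean (1) ...
mean(sample_means)

# ... with a spread close to population sd / sqrt(n) = 1 / sqrt(50).
sd(sample_means)
1 / sqrt(n_per_sample)

# hist(sample_means) would show a roughly bell-shaped distribution.
```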

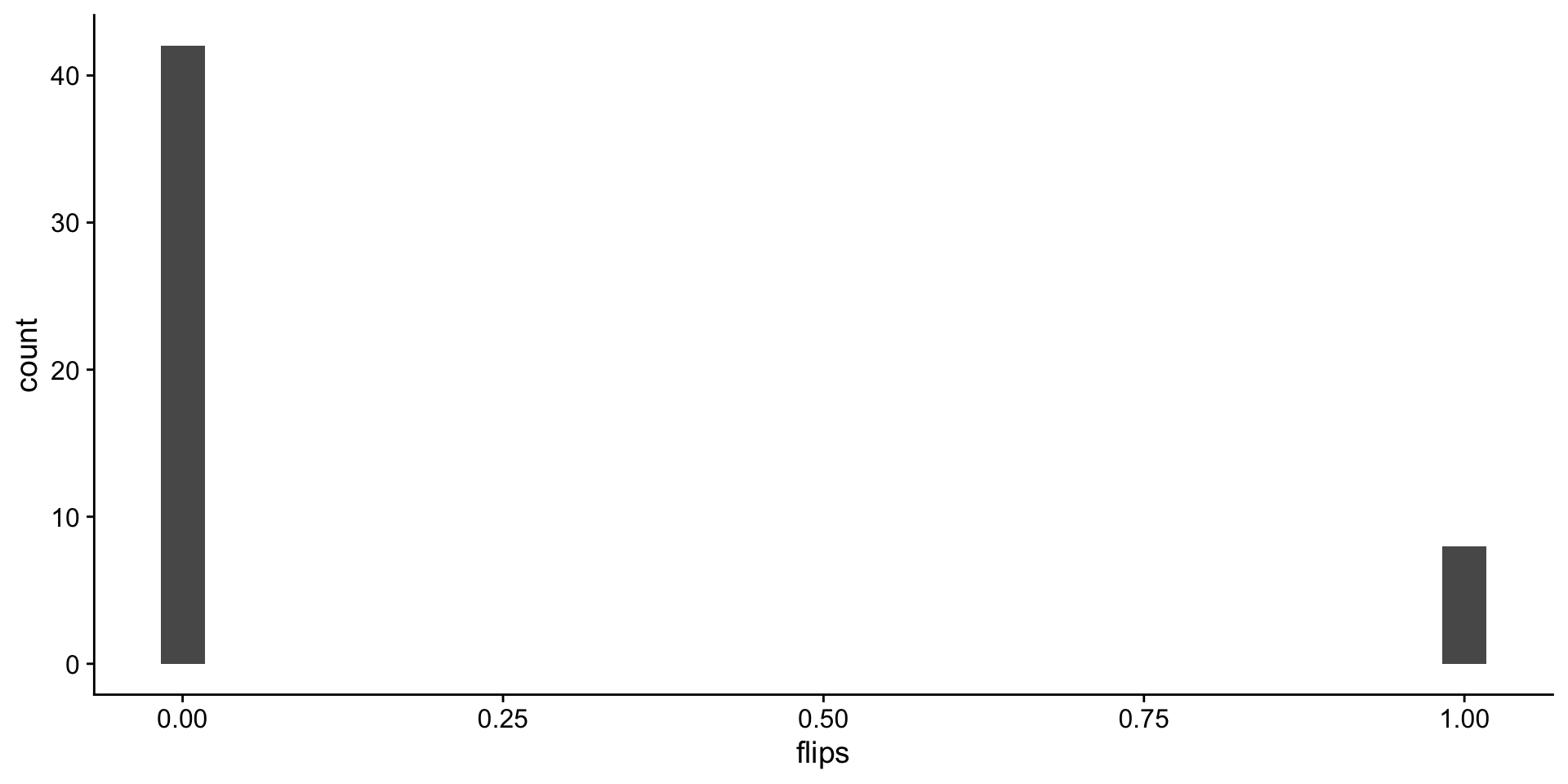

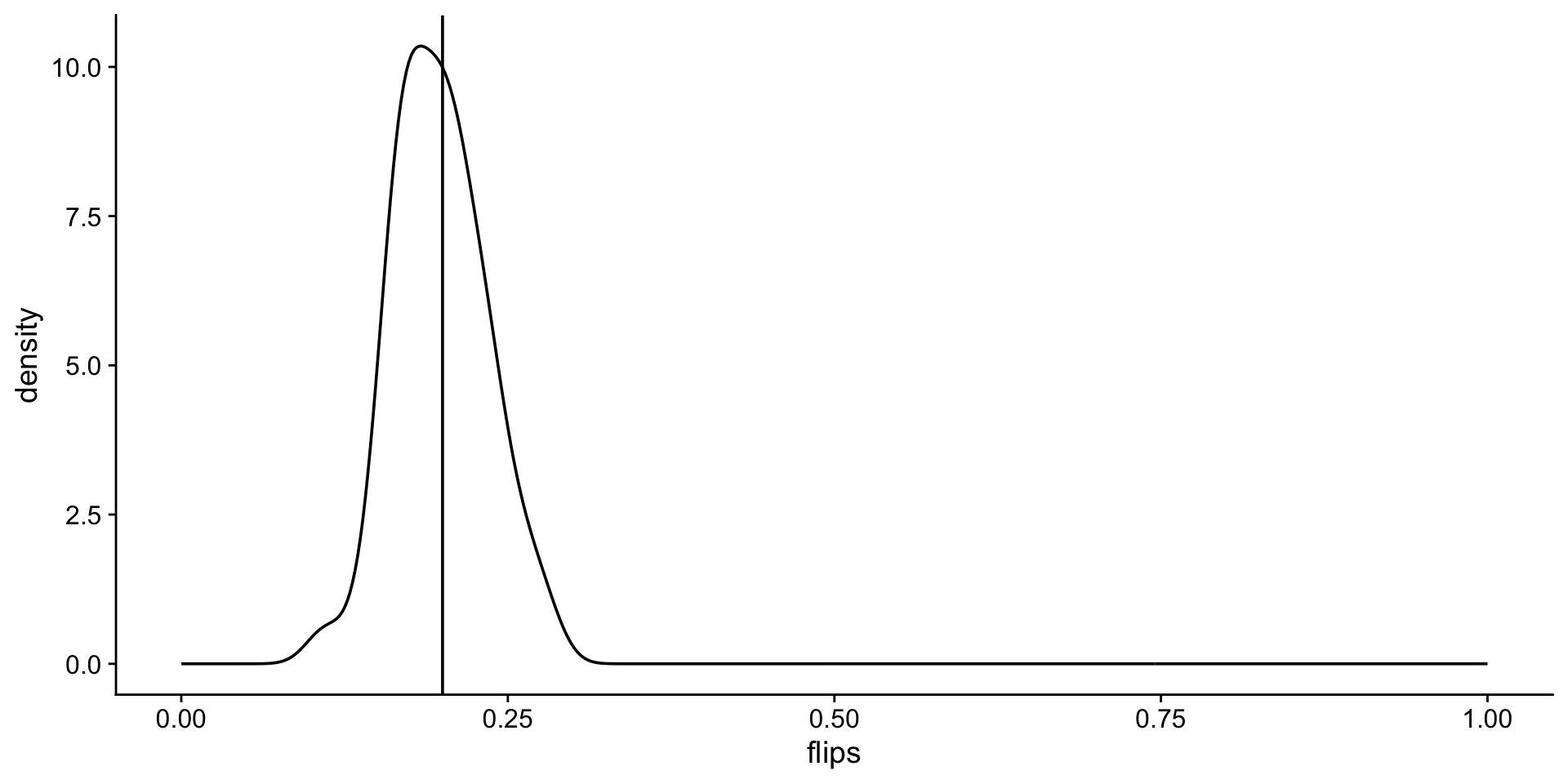

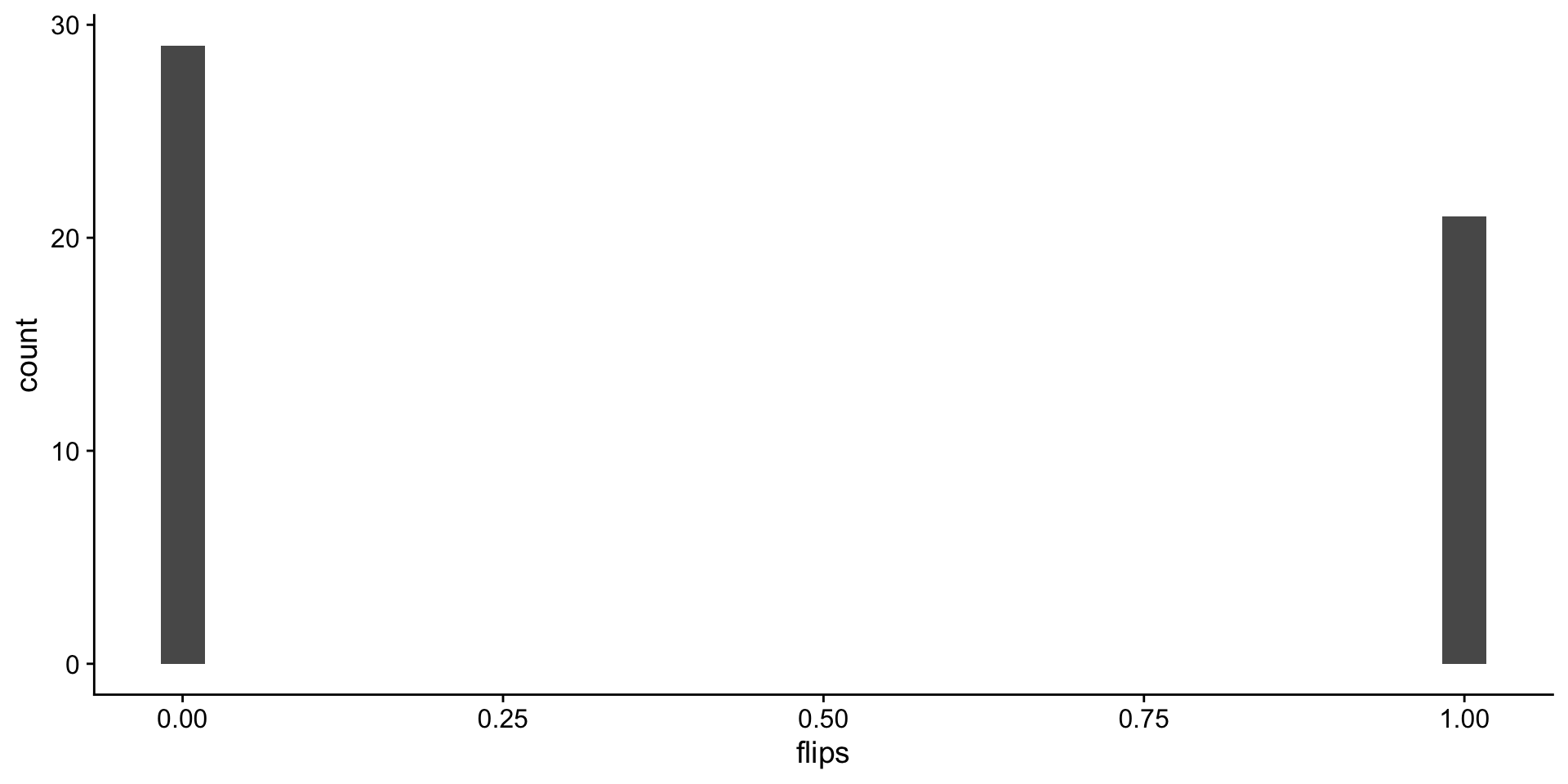

Back to coin flips!! 50 flips, one round.

flip distributions

Look at the distribution of fair flips

`stat_bin()` using `bins = 30`. Pick better value `binwidth`.

Look at the distribution of unfair flips

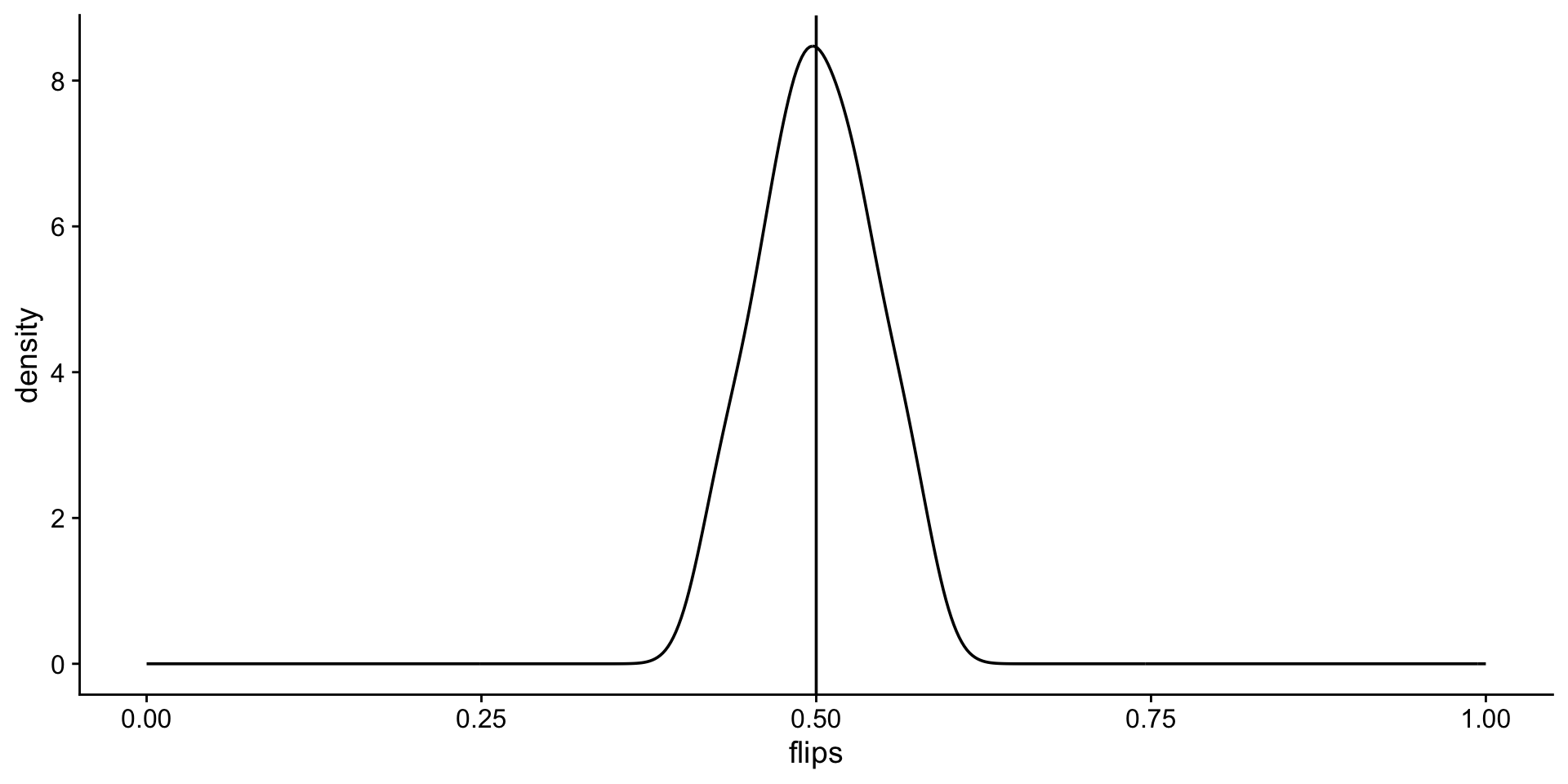

Now let’s sample means

Let’s do 100 rounds of 50 flips and take the average of each round.

Remember the size that I told you to ignore last class!
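One way this could be sketched in base R is with `rbinom()`, where `size` is the number of flips per round. The seed, the variable names, and the unfair coin's probability of heads (0.2) are assumptions for illustration, so the values will differ from any printed output in these slides.

```r
set.seed(2025)

n_rounds        <- 100  # number of rounds
flips_per_round <- 50   # flips in each round (the `size` argument)

# Each rbinom() value is the number of heads in one round;
# dividing by the number of flips gives the mean of that round.
fair_means   <- rbinom(n_rounds, size = flips_per_round, prob = 0.5) / flips_per_round
unfair_means <- rbinom(n_rounds, size = flips_per_round, prob = 0.2) / flips_per_round

head(fair_means)
mean(fair_means)    # close to 0.5
mean(unfair_means)  # close to 0.2 (assumed bias)
```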

[1] 0.52 0.47 0.41 0.49 0.51 0.45 0.56 0.50 0.52 0.46 0.46

[12] 0.52 0.51 0.46 0.47 0.49 0.54 0.58 0.44 0.45 0.49 0.46

[23] 0.61 0.52 0.55 0.52 0.47 0.49 0.45 0.42 0.44 0.43 0.52

[34] 0.51 0.48 0.49 0.55 0.48 0.62 0.50 0.52 0.47 0.42 0.51

[45] 0.50 0.48 0.52 0.44 0.54 0.56

sampled flip mean distributions

Look at the distribution of fair flips

Look at the distribution of unfair flips

but is it normal?

# A tibble: 2 × 4

cheating variable statistic p

<chr> <chr> <dbl> <dbl>

1 fair flips 0.980 0.535

2 unfair flips 0.982 0.632

yup!

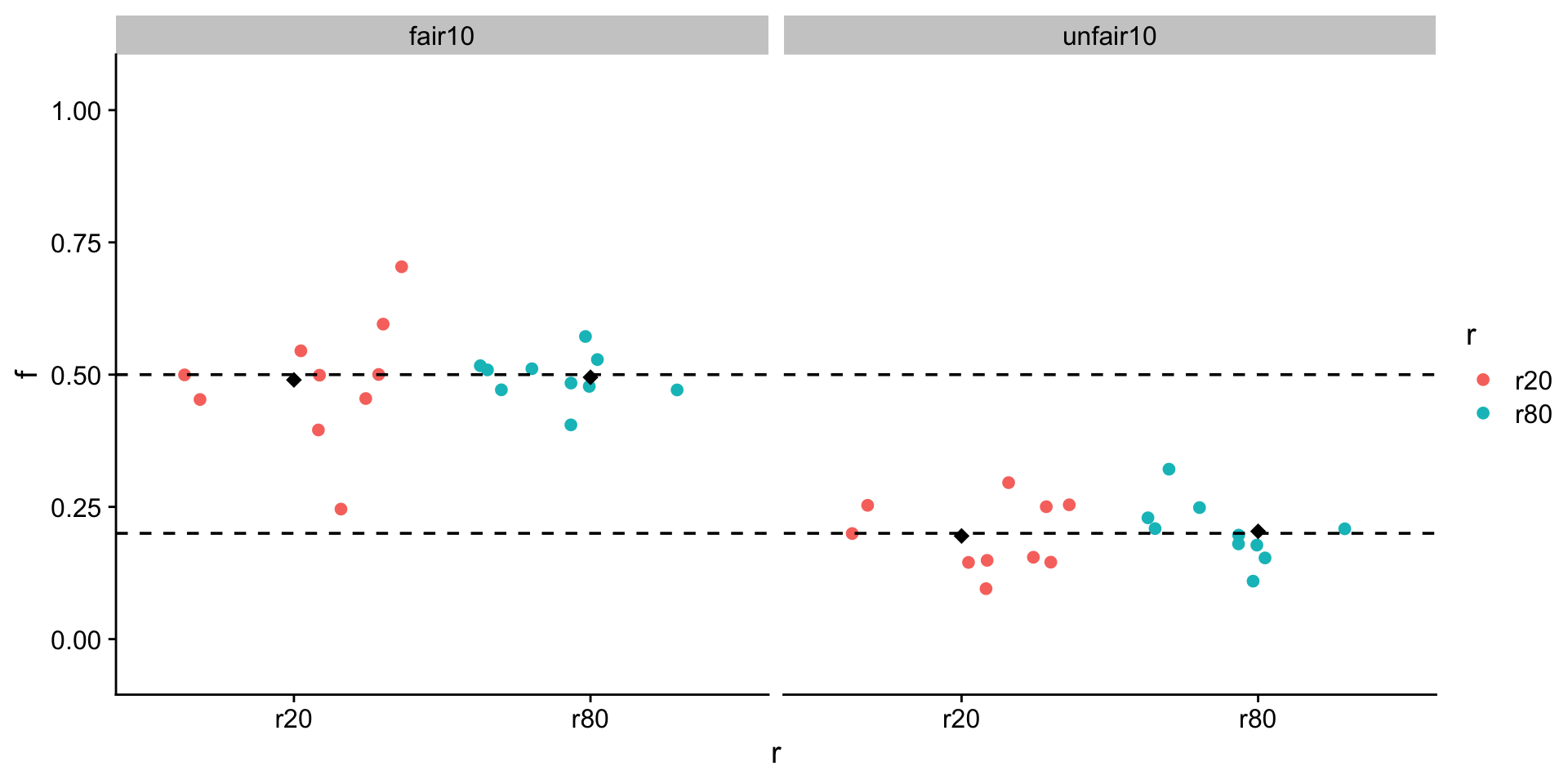

What about the mean + sd with different parameters?

10 fair and unfair flips

20 and 80 times

put it all together

Visualize

library(ggplot2)
library(cowplot)  # provides theme_cowplot()

# `all` is the combined data frame built in the previous step.
ggplot(all, aes(x = r, y = f, color = r)) +
  # one jittered point per observation
  geom_jitter() +
  # overlay the group mean as a black diamond;
  # `fun` replaces `fun.y`, which is deprecated as of ggplot2 3.3.0
  stat_summary(
    fun = mean,
    geom = "point",
    shape = 18,
    size = 3,
    color = "black"
  ) +
  ylim(-0.05, 1.05) +
  facet_grid(~type) +
  # dashed reference lines at 0.5 and 0.2
  geom_hline(yintercept = .5, linetype = "dashed") +
  geom_hline(yintercept = .2, linetype = "dashed") +
  theme_cowplot()